Artificial Intelligence Security Threats and Countermeasures

AI: Revolutionizing Cybersecurity or Unleashing New Risks?

AI is not a new term in cybersecurity. It has been a part of cyber protection for quite some time. Artificial intelligence (AI) is a wide term that includes numerous subfields such as machine learning (ML), deep learning (DL), and natural language processing. These fields frequently overlap and complement one another.

Machine learning (ML) is a subset of artificial intelligence that employs algorithms to build predictive models. It has been the most frequently used AI field in cybersecurity, from the early days of User and Entity Behavior Analytics (UEBA) in 2015 to SIEM, EDR, and XDR. The technology has since matured, allowing us to detect behaviors and anomalies at scale.

In that climate, ChatGPT (built on GPT-3.5) was launched in late 2022, and since then, particularly since mid-2023, there has been unceasing discussion about how AI will transform cybersecurity. It is much like the stories we heard in the early days of UEBA and XDR. Almost every cybersecurity vendor offers, or intends to offer, a Generative AI-powered layer on top of its current product. There is also a lot of misinformation claiming that AI, particularly in the hands of malicious actors, introduces entirely new hazards and that we need AI to combat them.

Large Language Models (LLMs) are AI systems that can analyze and produce text resembling human language. By leveraging deep learning methods and extensive training data, these systems can generate natural language text for a range of purposes, such as chatbots, translation services, and content creation.

In this piece, we concentrate on LLMs.

Does Artificial Intelligence Increase Security Risks for Cyber-Defenders?

Short answer: Yes. However, in my opinion, a better question is whether AI poses a new risk to cyber defenders.

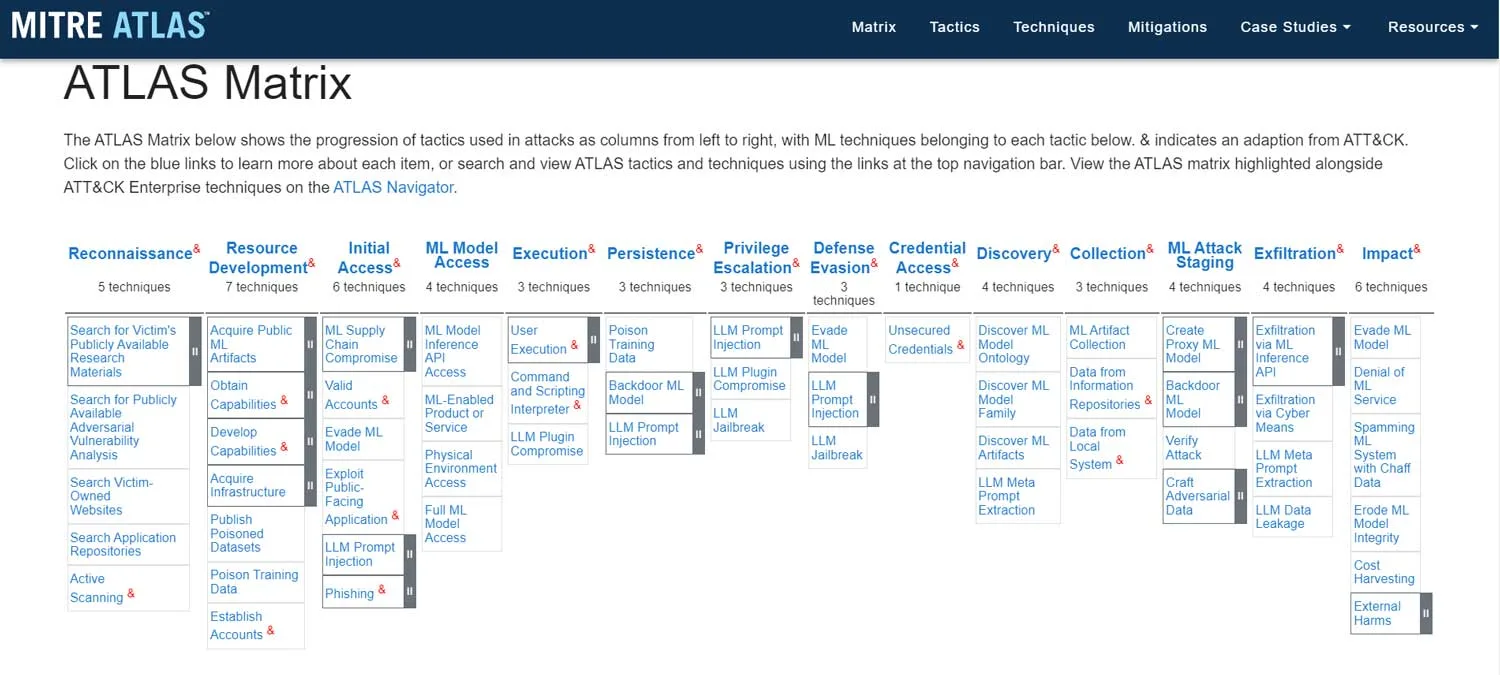

A great resource to understand AI risks is the MITRE ATLAS framework at https://atlas.mitre.org/matrices/ATLAS.

As shown in the diagram above, practically all the tactics, apart from ML Attack Staging, are similar to those in the ATT&CK Enterprise matrix (that is, traditional cyber-attacks). Even if we look at the techniques, the vast majority relate to attacks on the LLMs themselves, which makes them highly relevant to LLM developers. What about LLM consumers? Do they share the same risks as developers? Can they do anything about it? And what about the industry’s concern about bad actors leveraging LLMs? Does this information have any significant implications for the existing foundations of cyber defense?

According to security professionals, most of the problems associated with LLMs existed before LLMs, but the scale of attacks and efficiency of attackers have increased. Here are some of the risks:

- Attackers become more efficient when they use LLMs.

- Consumers inadvertently expose data in public LLMs through queries and uploads.

- LLM developers are concerned about the security and privacy of LLM technologies.

- Other issues, such as ethics, bias, and intellectual property.

- LLMs expand the attack surface.

Use of LLMs by Threat Actors

This field has the most FUD (Fear, Uncertainty, Doubt) in the business, with companies marketing AI-enabled solutions to combat bad actors.

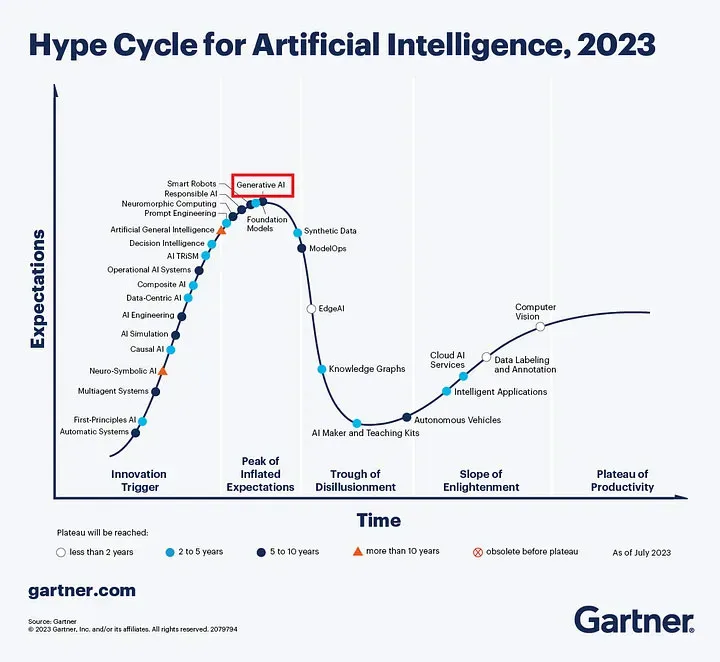

Looking at the most recent Gartner Hype Cycle for AI, we can see that Generative AI has reached its peak, and the only route ahead is disillusionment.

Attackers usually look for the path of least resistance. Why would they do the difficult thing when defenders still struggle with the basics and easy opportunities remain?

According to IBM X-Force research, the most common attack vectors are identity-based attacks, phishing, and the compromise of public-facing applications. The same theme appears in other reports, such as the Verizon DBIR and Mandiant M-Trends.

Is there an advantage for attackers who use LLMs over those who do not? Possibly. They could create better phishing emails with higher click rates, perform better social engineering, or produce dangerous malware faster. Do we need to adapt our defensive tactics? Not really, because even without LLMs and with lower click rates, the possibility of compromise remains. Even a single compromised account can escalate to a larger crisis due to lateral movement and privilege escalation, as evidenced by numerous real-world cases in recent years.

It is critical to retain the high maturity of existing controls while focusing on controls that can prevent or detect post-exploitation techniques such as lateral movement, persistence, privilege escalation, and command and control.

At the moment, getting fundamental cyber hygiene right and ensuring the efficacy of existing controls should suffice. We should only be concerned with esoteric attacks once the foundation is solid.

This may change in the future as AI becomes more capable and new attack vectors emerge, but as long as the attack vectors remain the same, the defensive controls can remain the same, albeit more agile and faster.

Use of Public LLMs by Users

Data loss has always posed a threat to organizations, and the rise of numerous public LLMs has opened new avenues for enterprise users to inadvertently leak corporate information. While cybersecurity experts are well aware of these risks, the average user may not be as vigilant.

The risk becomes real when users unknowingly share sensitive data through chatbots or upload documents containing confidential information for analysis.

Organizations often rely on Data Loss Prevention (DLP), Cloud Access Security Broker (CASB), Security Service Edge (SSE), or proxy solutions to monitor and regulate user activities. However, the emergence of Generative AI presents a challenge, as many existing solutions lack a URL category for Gen AI. Without this classification, it becomes increasingly difficult to keep track of the growing number of LLMs and the continuous influx of new ones each week.
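
Where a proxy or SSE product has no Gen AI category, a locally maintained domain list can at least make the usage visible. The snippet below is a minimal sketch under that assumption: the `GENAI_DOMAINS` set is illustrative and far from complete, and the function names are hypothetical rather than part of any product.

```python
from collections import Counter
from urllib.parse import urlsplit

# Illustrative, incomplete list; a real deployment would maintain and update
# this list (or rely on a vendor-supplied Gen AI URL category).
GENAI_DOMAINS = {"openai.com", "chatgpt.com", "claude.ai", "gemini.google.com"}


def is_genai_host(host: str) -> bool:
    """Return True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in GENAI_DOMAINS)


def genai_usage(urls: list[str]) -> Counter:
    """Count requests per Gen AI host in a list of full URLs from proxy logs."""
    counts: Counter = Counter()
    for url in urls:
        host = urlsplit(url).hostname  # None if the URL has no scheme
        if host and is_genai_host(host):
            counts[host] += 1
    return counts
```

Matching on the domain suffix with a leading dot avoids false hits on look-alike hosts that merely end with the same letters.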

Even if your current tools support Gen AI categorization, it is important to recognize the benefits that this technology offers in addressing user challenges and enhancing efficiency. Therefore, it is crucial to ask questions such as:

- Do we want to prevent all access to LLMs? This is arguably the simplest control to implement: block the Gen AI category of URLs in SSE/Proxy or other tools, as long as they support it. At the same time, we must be mindful of the possibility that we are impeding business growth. Whether we like it or not, Gen AI is an enabler, and businesses will undoubtedly seek to exploit it for increased efficiencies. If the environment is regulated and such use is prohibited, the decision becomes easy. Otherwise, we should be flexible in our approach.

- If not blocking, then what? Use a risk-based approach. Perhaps people entering their prompts and receiving responses is acceptable but pasting data/scripts or uploading documents is not. Perhaps we can enable the use of public LLMs while blocking any paste or upload actions with SSE/DLP or something similar. This can be developed further. Perhaps we should enable pasting or uploading data while blocking sensitive info. This necessitates that the organization has developed data protection rules and the ability to identify sensitive data.

- Can we supply an authorized LLM for the enterprise? While the majority of LLMs are public and free to use, providers such as OpenAI, Google, and Microsoft have begun to offer premium and enterprise versions. One such example is Microsoft Copilot (previously Bing Chat Enterprise), which is included with practically all M365 and O365 SKUs at no additional cost. It uses the well-known GPT-4 and DALL-E 3 models. When used with a work or school account, it offers commercial data protection, meaning that both user and organizational data are protected: prompts and responses are not saved, Microsoft has no direct access to the chat data, and the underlying models are not trained on the inputs. This could be a suitable choice for organizations using M365 or O365, and analogous solutions exist for non-Microsoft stacks. If your budget allows, there is also Copilot for Microsoft 365, which has access to the same internal documents and files as the user and is a very powerful productivity enhancer.

The last option can be a win-win: users get an approved tool that increases their productivity, along with new and fascinating technology to explore, while all other LLMs are blocked. We still need to train users on the risks around intellectual property, inaccurate output, and submitting sensitive data.
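
The risk-based options discussed above (allow prompts but block paste and upload, or allow everything except sensitive content) can be expressed as a small policy function. This is only a sketch: the patterns, mode names, and the `evaluate` function are assumptions for illustration, and a real DLP policy would be driven by the organization's own data classification rules.

```python
import re
from dataclasses import dataclass

# Illustrative detectors only; real policies need far more robust matching.
SENSITIVE_PATTERNS = {
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}
ACTIONS = {"prompt", "paste", "upload"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def find_sensitive(text: str) -> list[str]:
    """Return the names of all patterns that match the text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def evaluate(action: str, content: str, mode: str = "block_sensitive") -> Decision:
    """Decide whether a user action towards a public LLM is allowed.

    mode "block_paste_upload": typed prompts only, no paste or upload.
    mode "block_sensitive":    everything allowed unless sensitive data is found.
    Sensitive content is blocked in both modes.
    """
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    if mode == "block_paste_upload" and action in {"paste", "upload"}:
        return Decision(False, f"{action} is not permitted for public LLMs")
    hits = find_sensitive(content)
    if hits:
        return Decision(False, "sensitive data detected: " + ", ".join(hits))
    return Decision(True, "no policy violation")
```

The stricter mode needs no content inspection at all, which is why it is the easier one to deploy; the second mode only works once the organization can reliably identify its sensitive data.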

The Attack Surface of Large Language Models

In the business sector, as opposed to consumer solutions, LLMs are meant to solve a problem using enterprise data, which means users ask questions and obtain insights based on corporate data. Inevitably, such LLMs will have numerous integrations with inputs, data sources, data lakes, and other business applications.

Attackers are known for their creativity in using existing capabilities to evade defenses. Living off the Land attacks are widely documented. Can we expect a "Living off the LLM" attack in the future? Access control and permissions across the entire stack, including interfaces and APIs, will be critical. As companies speed up the deployment of LLMs and connect them with organizational data, cyber defenders will need to step up and devise tactics to prevent misuse or abuse of this new attack surface.
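
One way to picture access control across the stack is to filter enterprise documents by the caller's permissions before anything reaches the model, so the LLM never sees data the user could not open directly. The sketch below assumes a simple group-based model; `Document`, `User`, and both functions are hypothetical names, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # groups permitted to read this document


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]


def authorized_documents(user: User, documents: list[Document]) -> list[Document]:
    """Keep only documents that share at least one group with the user."""
    return [d for d in documents if d.allowed_groups & user.groups]


def build_context(user: User, documents: list[Document]) -> str:
    """Assemble the text passed to the LLM from authorized documents only."""
    return "\n\n".join(
        f"[{d.doc_id}] {d.text}" for d in authorized_documents(user, documents)
    )
```

Enforcing the check at retrieval time, rather than asking the model to withhold information, keeps the decision in ordinary code that can be audited and tested.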

Countermeasures and the Use Case of LLMs in Cybersecurity

If you’ve gotten this far, you’re probably wondering if there are any benefits to LLMs in cybersecurity. The quick answer is yes, but considerably fewer than the current hype suggests. Let us look at some of the use cases that can truly help cyber defenders:

- SOC teams have traditionally faced challenges in quickly and accurately assessing incidents. LLMs can help enhance this process to some extent. By analyzing large volumes of data, adding context to alerts, evaluating severity levels, and recommending actions, LLMs offer valuable support. When SOC analysts ask questions in plain language, LLMs can translate them into more complex queries, retrieve relevant data, and present it in an easily understandable manner. This trend is evident in the cybersecurity industry, with the emergence of solutions like Microsoft Copilot for Security and various other AI chatbots integrated within EDR/XDR/CNAPP offerings.

- While the capability appears encouraging, it also poses risks. Without appropriate grounding, LLMs are prone to hallucinations, and a SOC analyst who may not have a lot of experience is more likely to trust the output of an LLM. What if it’s wrong? While there is a rush to incorporate LLMs into every product, as customers we must be mindful that the benefits in standalone products will be small, and the true benefits will come from solutions with access to huge data lakes, such as SIEM or XDR. If the solution landscape is fragmented, you may wind up with a separate LLM embedded in each solution, putting us back at square one, with the human needing to correlate them all.

- Potential Threat Hunting. This could be the most important use case, but the world has yet to see a model capable of hunting threats on its own. A model that assists the SOC analyst by translating natural language questions into complex SIEM/XDR queries would greatly reduce fatigue and make life easier.

- SecOps Automation. LLMs are similar to advanced SOAR playbooks in that they can contextualize an alarm and perform or recommend specific remedial actions. The capacity to develop bespoke playbooks on demand for each alert can significantly increase productivity. However, given their proclivity for hallucinations, it will take a courageous company to permit LLMs to execute responsive actions. This use case will still require a human in the loop.
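
The human-in-the-loop requirement from the SecOps automation case can be sketched as a gate between what an LLM recommends and what actually runs. Everything here is an assumption for illustration: the action names, the `AUTO_APPROVED` allowlist, and the `dispatch` function are hypothetical and not tied to any SOAR product.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical allowlist of low-impact actions that may run without review.
# Anything not listed here requires explicit analyst approval.
AUTO_APPROVED = {"add_alert_comment", "enrich_indicator"}


@dataclass
class ProposedAction:
    name: str       # action the LLM recommends, e.g. "isolate_host"
    target: str     # host, account, or indicator the action applies to
    rationale: str  # the LLM's explanation, shown to the analyst


def dispatch(
    actions: list[ProposedAction],
    execute: Callable[[ProposedAction], None],
    approve: Callable[[ProposedAction], bool],
) -> tuple[list[ProposedAction], list[ProposedAction]]:
    """Run allowlisted or analyst-approved actions; hold everything else.

    Returns (executed, held).
    """
    executed, held = [], []
    for action in actions:
        if action.name in AUTO_APPROVED or approve(action):
            execute(action)
            executed.append(action)
        else:
            held.append(action)
    return executed, held
```

Because the gate denies by default, a hallucinated or unexpected action name ends up in the held list instead of being executed.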

Conclusion

Gen AI has evolved at an astounding rate. Since the debut of ChatGPT, new iterations of Gen AI technology have been released in quick succession. It’s hard to believe that it’s been just over two years since ChatGPT’s original debut in November 2022, and we’re already seeing multiple multi-modal LLMs (able to work with text, audio, images, and video) with varied capabilities.

While the cybersecurity community was still working to define XDR, a new vector has emerged for us to consider. Threats can be handled today with existing processes and technologies, but the future will undoubtedly be different. AI has a bright future in cybersecurity, but we also expect to see AI-powered autonomous attacks with enhanced defense evasion in the coming years, which will be a significant challenge.

FAQ

Does artificial intelligence increase security risks for cyber defenders?

In brief, yes. However, the better question is whether AI introduces new threats for those defending against cyber-attacks. AI also brings challenges such as biased decision-making and privacy concerns. It is essential to embed fairness and transparency into AI algorithms to address these risks effectively.

What are the risks associated with LLMs?

LLMs come with risks such as attackers operating more efficiently, users unknowingly exposing data, security and privacy concerns for LLM developers, and ethical issues like bias and intellectual property. Furthermore, LLMs broaden the attack surface, creating new challenges for cyber defenders.

Are there benefits to using LLMs in cybersecurity?

Yes, there are advantages, but we should proceed with care. Large Language Models (LLMs) have the potential to aid Security Operations Center (SOC) teams in evaluating incidents, conducting threat hunts, and automating security operations. However, if not properly supervised and grounded, LLMs can lead to mistakes and misplaced trust. It is essential to weigh the advantages and drawbacks of incorporating LLMs into cybersecurity procedures.