by Luke Orellana | Sep 27, 2018 | Managed Service Providers

KeePass is one of the most widely used password managers in IT today. It’s simple to use and secure, which meets the needs of most businesses. Because it’s open source, the community can inspect its code and verify that it isn’t stealing customer data.

It is one of the top free tools an MSP can use for password management. To top it all off, a PowerShell module is available for automating and managing KeePass databases. This is incredibly useful for MSPs as they usually have a tough time tracking all the passwords for their clients and ensuring each engineer is saving the passwords to KeePass for each project.

Automating an ESXi host’s deployment, generating a new password, and then automatically storing it without any human intervention is a godsend. I can’t count how many times I’ve run into an issue where a password was nowhere to be found in KeePass or was typed incorrectly into KeePass. Quite simply, if you’re an MSP managing a large number of systems, you need KeePass, and you need to automate it. Here’s how.

Getting Started Automating with KeePass

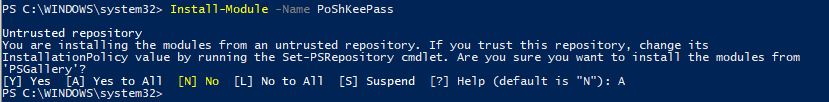

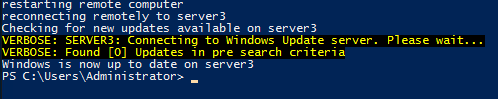

If you don’t have KeePass, you can download it here. You can also get the module from the PowerShell Gallery here. Alternatively, on a Windows 10 machine, open an administrative PowerShell prompt and type:

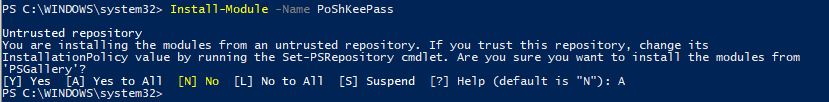

Install-Module -Name PoShKeePass

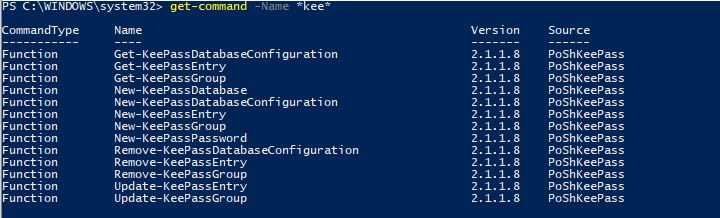

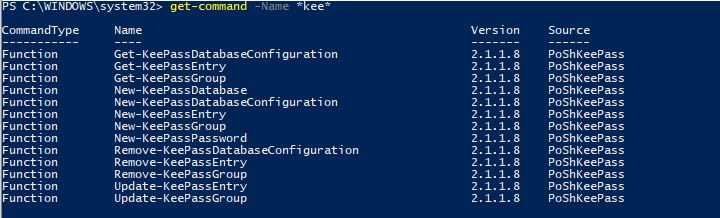

Now when we run a quick Get-Command search, we can see our new KeePass functions and we’re ready to go:

get-command -Name *kee*

Connecting With KeePass

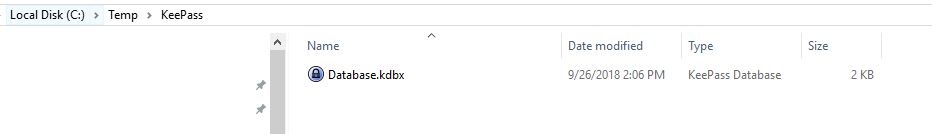

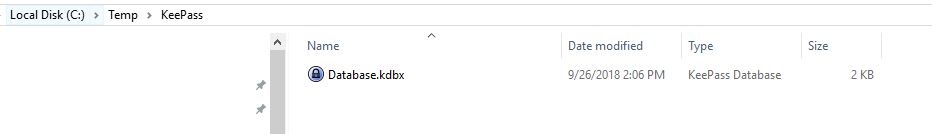

First, we need to establish a connection to our KeePass database. I have a KeePass database created in a folder. Notice the .kdbx file extension, which is the database extension KeePass uses:

Now we will use the New-KeePassDatabaseConfiguration cmdlet to set up a profile for the connection to the .kdbx file. We will also use the -UseMasterKey parameter to specify that this KeePass database is set up to use a Master Key. There are several different ways of configuring authentication to a KeePass database, but for this demo we will keep it simple and use a Master Key, which is just a password used to access the database:

New-KeePassDatabaseConfiguration -DatabaseProfileName 'LukeLab' -DatabasePath "C:\Temp\KeePass\Database.kdbx" -UseMasterKey

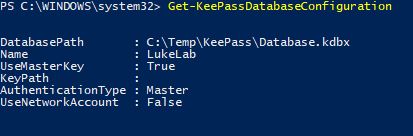

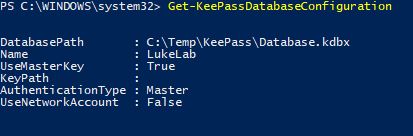

If I run Get-KeePassDatabaseConfiguration I can see my database profile is set up and points to the .kdbx:

Generating Passwords with KeePass

One really cool feature of the KeePass PowerShell cmdlets is that we can use KeePass to generate a password for us. This is very useful when setting unique passwords for each server deployment. This can be done with regular PowerShell code, but the KeePass module lets us generate a password in one line, on the fly, without any extra coding. We will create our password variable and use the New-KeePassPassword cmdlet to generate a password. The parameters specify the password complexity that we want:

$password = New-KeePassPassword -UpperCase -LowerCase -Digits -SpecialCharacters -Length 10
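For comparison, here is roughly what the "regular PowerShell coding" route could look like. This is a minimal sketch and not how New-KeePassPassword works internally; the function name New-RandomPassword and the special-character set are assumptions chosen for illustration.

```powershell
# Hypothetical helper: builds a random password containing at least one
# upper-case letter, lower-case letter, digit, and special character.
function New-RandomPassword {
    param(
        [ValidateRange(4, 128)]
        [int]$Length = 10
    )

    $sets = @(
        'ABCDEFGHIJKLMNOPQRSTUVWXYZ',
        'abcdefghijklmnopqrstuvwxyz',
        '0123456789',
        '!@#$%^&*()-_=+'          # assumed special-character set
    )
    $rng   = [System.Security.Cryptography.RandomNumberGenerator]::Create()
    $bytes = [byte[]]::new(4)

    # Returns a random index in [0, $Max). The modulo adds a tiny bias,
    # which is acceptable for a sketch.
    $nextIndex = {
        param([int]$Max)
        $rng.GetBytes($bytes)
        [int]([System.BitConverter]::ToUInt32($bytes, 0) % $Max)
    }

    try {
        $chars = [System.Collections.Generic.List[char]]::new()

        # One character from every set so each class is represented
        foreach ($set in $sets) { $chars.Add($set[(& $nextIndex $set.Length)]) }

        # Fill the remainder from the combined alphabet
        $all = -join $sets
        while ($chars.Count -lt $Length) { $chars.Add($all[(& $nextIndex $all.Length)]) }

        # Fisher-Yates shuffle so the guaranteed characters are not always first
        for ($i = $chars.Count - 1; $i -gt 0; $i--) {
            $j = & $nextIndex ($i + 1)
            $tmp = $chars[$i]; $chars[$i] = $chars[$j]; $chars[$j] = $tmp
        }

        -join $chars
    }
    finally {
        $rng.Dispose()
    }
}
```

Unlike New-KeePassPassword, this returns a plain string, so it would still need converting to a secure string before being stored. That extra work is exactly what the one-line cmdlet saves.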

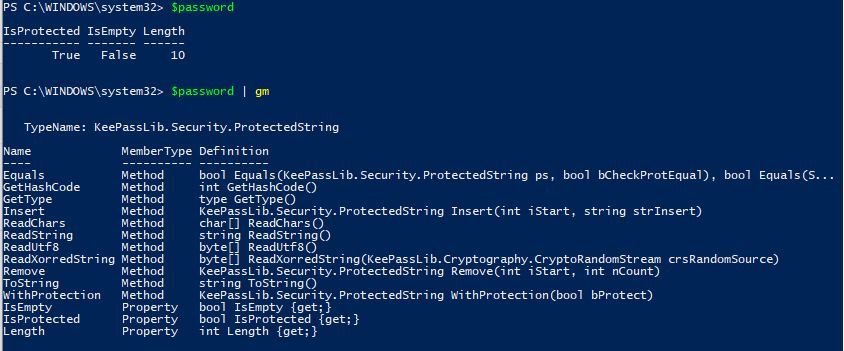

Now when we inspect our $password variable we can see that it’s a secured KeePass object:

Now let’s upload a new entry to our KeePass database. Let’s say we are deploying an ESXi host and want to generate a random password and save it to our KeePass database. We will use the New-KeePassEntry cmdlet and specify our profile “LukeLab” that we set up earlier. We will also pass in our $password variable, which holds the password we just generated with the complexity requirements we chose. Then we get prompted for our Master Key password:

New-KeePassEntry -DatabaseProfileName LukeLab -Title 'ESXi1' -UserName root -KeePassPassword $password -KeePassEntryGroupPath Database/ESXi

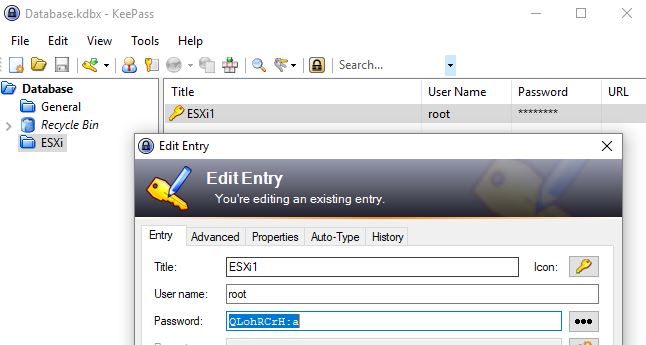

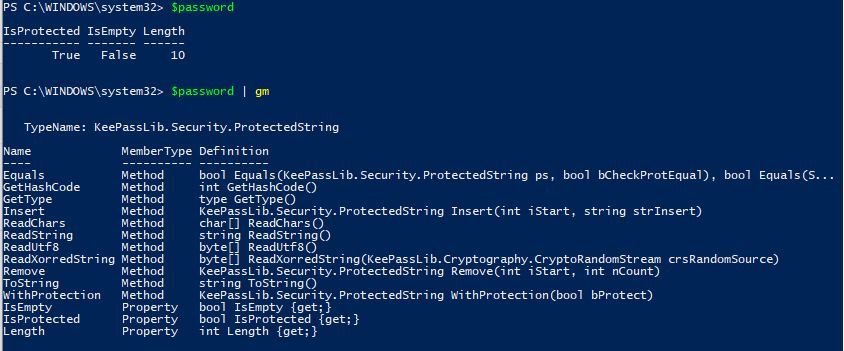

Now, when we open up our password database we can see our new entry and a randomly generated password:

Updating A KeePass Password

Password rotation has become standard practice in IT. Security matters more than ever, and changing passwords at regular intervals has become a necessity. Luckily, with KeePass and PowerShell, we can create scripts that automate the process of changing our ESXi host password and then update the new password in KeePass. We start by collecting the current KeePass entry into a variable by using the Get-KeePassEntry cmdlet:

$KeePassEntry = Get-KeePassEntry -KeePassEntryGroupPath Database/ESXi -Title "ESXi1" -DatabaseProfileName "LukeLab"

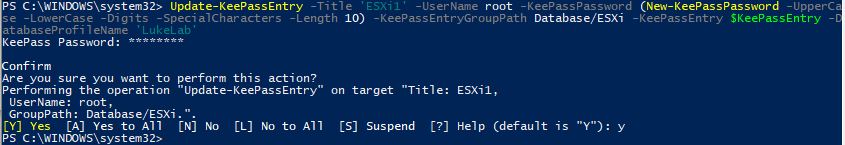

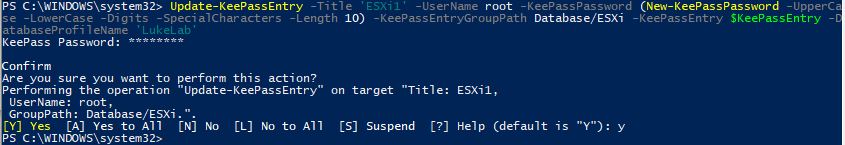

Next, we use the Update-KeePassEntry cmdlet to update the entry with a new password.

Update-KeePassEntry -Title 'ESXi1' -UserName root -KeePassPassword (New-KeePassPassword -UpperCase -LowerCase -Digits -SpecialCharacters -Length 10) -KeePassEntryGroupPath Database/ESXi -KeePassEntry $KeePassEntry -DatabaseProfileName 'LukeLab'
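The two steps above (fetch the entry, then update it with a fresh password) could be wrapped into one helper for use in a rotation script. The sketch below uses only the cmdlets and parameters already shown in this article; the function name Update-HostKeePassPassword and its parameter names are hypothetical, and it assumes the PoShKeePass module is installed and the database profile already exists.

```powershell
# Sketch only: requires the PoShKeePass module and an existing database profile.
function Update-HostKeePassPassword {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$Title,
        [Parameter(Mandatory)][string]$GroupPath,
        [Parameter(Mandatory)][string]$ProfileName,
        [string]$UserName = 'root',
        [int]$Length = 10
    )

    # Look up the existing entry, as in the Get-KeePassEntry call above
    $entry = Get-KeePassEntry -KeePassEntryGroupPath $GroupPath -Title $Title -DatabaseProfileName $ProfileName
    if (-not $entry) {
        throw "No KeePass entry titled '$Title' found in '$GroupPath'."
    }

    # Generate the replacement password with the same complexity switches used earlier
    $newPassword = New-KeePassPassword -UpperCase -LowerCase -Digits -SpecialCharacters -Length $Length

    # Write the new password back to the same entry
    Update-KeePassEntry -Title $Title -UserName $UserName -KeePassPassword $newPassword `
        -KeePassEntryGroupPath $GroupPath -KeePassEntry $entry -DatabaseProfileName $ProfileName
}
```

With the names used in this article, a call would look like `Update-HostKeePassPassword -Title 'ESXi1' -GroupPath 'Database/ESXi' -ProfileName 'LukeLab'`. Expect the Master Key prompt to appear for each cmdlet that opens the database.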

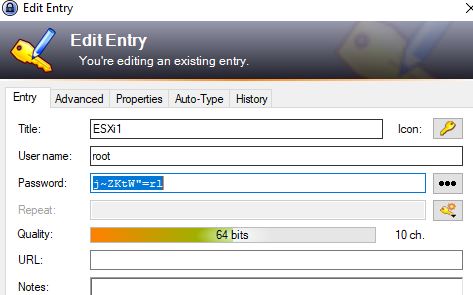

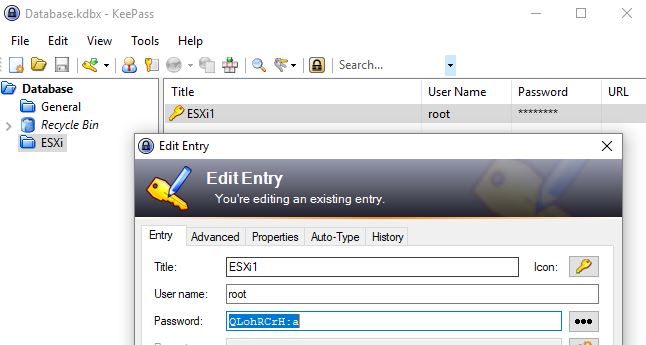

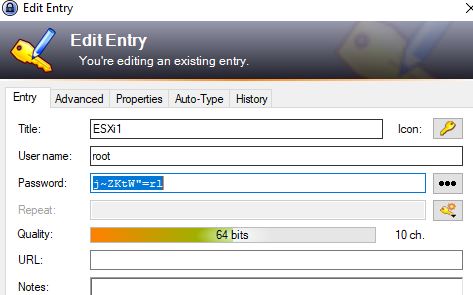

Now when we look at our password entry for ESXi1 we can see it has been updated with a new password:

Now let’s update our ESXi system by obtaining the secure string from the new Entry and changing the password on the ESXi Host.

We save our entry to a variable again:

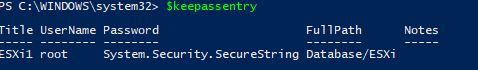

$KeePassEntry = Get-KeePassEntry -KeePassEntryGroupPath Database/ESXi -Title "ESXi1" -DatabaseProfileName "LukeLab"

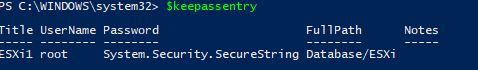

Now we have our password as a secure string. If we look at the properties using $keepassentry we can see the secure string object is there:

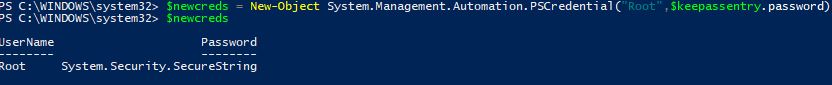

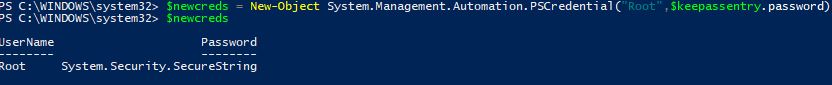

So now we can use this variable to create a credential object and pass that along to a script and change the ESXi password to this new password:

$newcreds = New-Object System.Management.Automation.PSCredential("Root",$keepassentry.password)

And, just to prove it, I can use the GetNetworkCredential method to show that the decoded password is the same as the one in our KeePass database:
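As a minimal sketch of that round trip, the snippet below builds a credential from a secure string and reads the plain text back. The secure string here is a made-up stand-in for $KeePassEntry.Password so the example runs without a KeePass database.

```powershell
# Stand-in for $KeePassEntry.Password; the value is hypothetical and for illustration only
$securePassword = ConvertTo-SecureString -String 'Example-P@ss1' -AsPlainText -Force

# Same construction as above, with the stand-in secure string
$newcreds = New-Object System.Management.Automation.PSCredential('root', $securePassword)

# GetNetworkCredential() exposes the decoded password as plain text
$plainText = $newcreds.GetNetworkCredential().Password
$plainText
```

Only decode the password like this for a quick check. In scripts, pass the credential object itself along so the plain text never lands in output or logs.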

Wrap-Up

This is a very powerful module, as it allows IT pros to automate the way they manage and enter passwords. The module offers many more capabilities than we covered here, and the module author keeps adding more advanced features. This is an exciting time to be in IT, where we are able to use an assortment of PowerShell modules to accomplish feats that were nearly impossible only a few years ago.

What about you? Do you see this being useful for your engineers? Have you used KeePass for password tracking before?

Thanks for reading!

by Luke Orellana | Aug 17, 2018 | Managed Service Providers

This is a great time to be in IT! With the rate of emerging projects on GitHub, we are seeing an explosion of projects and a mass collaboration with the community. As an MSP that uses PowerShell to automate its processes, one of the emerging projects worth taking a look at is the PowerShell Universal Dashboard.

This is essentially a collection of modules that allows one to create Web GUI Dashboards within a matter of minutes. This is an extremely useful tool and can be heavily utilized with your organization’s 3rd party application APIs to create a unified tool for your MSP.

How to install the PowerShell Universal Dashboard

We need to update PowerShellGet to version 1.6 before we can download and install the Universal Dashboard. To check the version installed on your server or workstation, type:

gcm -module powershellget

If the version listed there is not 1.6 then open up an administrative PowerShell console and type in the following to upgrade:

install-module powershellget -Force
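The version check can also be scripted so a deployment script only upgrades when it has to. This is a minimal sketch; the function name Test-ModuleMinimumVersion is an assumption, and it only inspects modules that Get-Module -ListAvailable can see on the local machine.

```powershell
# Hypothetical helper: $true when the newest installed copy of a module
# is at least the requested version, $false when it is older or missing.
function Test-ModuleMinimumVersion {
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][version]$MinimumVersion
    )

    $newest = Get-Module -ListAvailable -Name $Name |
        Sort-Object -Property Version -Descending |
        Select-Object -First 1

    [bool]($newest -and ($newest.Version -ge $MinimumVersion))
}

if (-not (Test-ModuleMinimumVersion -Name 'PowerShellGet' -MinimumVersion '1.6.0')) {
    Write-Warning 'PowerShellGet is older than 1.6.0; run: Install-Module PowerShellGet -Force'
}
```

The helper only reports; it leaves the actual Install-Module call to you, since that needs an administrative console.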

When we run the command again we can see that the module is now upgraded to 1.6:

There are now two editions of the Universal Dashboard. The paid edition is the “Enterprise Edition”, which must be licensed or it will only run for 1 hour. To install it, open an administrative PowerShell console and type the following command:

Install-Module -Name UniversalDashboard -RequiredVersion 1.7.0

The second edition available is the Community Edition. This version is free, but some features are not available in it, such as branding of the dashboard and REST API authentication. To install this version, open an administrative PowerShell console and type the following:

Install-Module UniversalDashboard.Community -AllowPrerelease

Note: you must have .NET Framework 4.5 installed in order to use PowerShellGet and run the Universal Dashboard. If you don’t have it installed, get it here.

Getting Started with the PowerShell Universal Dashboard

Once you have the modules installed, you can easily jump into developing your own Dashboard website. The beauty of the Universal Dashboard is that it is built on .NET Core, which means the dashboards we create can run virtually anywhere. We can run them in a container, in IIS, as a service on Windows, or even right from the command line. To get started, let’s create a simple dashboard by typing in the following:

$homepage = New-UDPage -name Homepage -Content {

New-UDCard -Title "This is our Test Card" -Text "I just created this card with the new-udcard cmdlet"

}

$testDashboard = New-UDDashboard -Title "This is our awesome dashboard" -page $homepage

What we did was create a homepage using the New-UDPage cmdlet. We named it Homepage and used the -Content parameter to put content into the page itself. In the -Content scriptblock we have the New-UDCard cmdlet, which allows us to create a card on the page and put text into that card.

Next we need to create a dashboard using the New-UDDashboard cmdlet. We use -Title to give our dashboard a title at the top and then specify which pages we want the dashboard to include with the -page parameter and specify our $homepage variable that we saved our homepage as.

Now that we have our dashboard created with its pages specified, we need to “start” it. We can do this by adding the Start-UDDashboard cmdlet to the end of our script and specifying the $testDashboard dashboard that we created above. I’m also specifying that port 1000 is used to access this dashboard; you will see how that works in the next steps:

Start-UDDashboard -Dashboard $testDashboard -port 1000
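If the dashboard fails to start, a common cause is that the chosen port is already taken. The helper below is a sketch for checking that up front. Test-PortAvailable is a hypothetical name, it only probes the loopback address, and it is not part of the Universal Dashboard module. On Linux and macOS, ports below 1024 usually need elevated privileges, so the check returns $false there for port 1000 unless you run elevated.

```powershell
# Hypothetical helper: tries to bind a TCP listener to the port on the
# loopback address and reports whether the bind succeeded.
function Test-PortAvailable {
    param(
        [Parameter(Mandatory)]
        [ValidateRange(1, 65535)]
        [int]$Port
    )

    $listener = [System.Net.Sockets.TcpListener]::new([System.Net.IPAddress]::Loopback, $Port)
    try {
        $listener.Start()
        $true
    }
    catch {
        # Bind failed: the port is in use or we lack permission to use it
        $false
    }
    finally {
        $listener.Stop()
    }
}
```

A script could call `Test-PortAvailable -Port 1000` before Start-UDDashboard and fall back to another port when it returns $false.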

Now we can run our entire script in ISE, in a PowerShell console, or, in my case, in Visual Studio Code. Then, to see the results of our code, we can open up a browser and go to http://localhost:1000. Now we can see the dashboard that we just created:

So in as little as 5 minutes we already have a web hosted GUI running. To stop running this dashboard, we can type in the following command:

get-uddashboard | stop-uddashboard

Going Further With the PowerShell Universal Dashboard

This is just a very basic tutorial on the PowerShell Universal Dashboard, intended to show how it works and how quickly you can get a dashboard-styled website up and running. All we did was make one page that has a card full of text. There are many more cmdlets and features that we can include on our dashboard pages. Check out this site to get an idea; it’s incredible.

The possibilities are limitless with this new framework for quickly creating web GUIs with PowerShell. We could create charts to monitor a specific application or server, or we could even add in authentication to provide privileged access to our dashboards.

We can also use the Web UI as a front end to run complex PowerShell scripts. Additionally, now that the PowerShell Universal Dashboard has a community edition, we are going to start to see some really cool projects emerge. With PowerShell and application APIs, this tool can be used to create monitoring dashboards of almost anything imaginable. I believe we are just barely scratching the surface with this module.

This post is part of a series about PowerShell tools for MSPs. Why not have a look at the other entries in the series?

Building PowerShell Tools for MSPs: Automating Windows Updates

Building PowerShell Tools for MSPs: HTML Tables for Reporting

Building PowerShell Tools for MSPs: Using SFTP

Building PowerShell Tools for MSPs: Working with REST APIs

Building PowerShell Tools for MSPs: Advanced Functions

by Andy Syrewicze | Jul 19, 2018 | Managed Service Providers

As an MSP, you’ll always look for ways to increase profits, and many MSPs looking at the prices charged by Cloud services such as AWS, Azure, and Google may be tempted to bypass them and develop their own cloud service for their customers. This article explains why you shouldn’t.

Cloud vs On Premise

It’s a cloud-first world these days, right? Many Managed Service Providers are jumping on the cloud hype train and with good reason! The benefits of cloud technologies are numerous and can be game-changing for businesses and the MSPs working with them to manage IT. It seems like an inevitable thing that most services run by an MSP will eventually move from on-premise to the cloud.

However, from an MSP’s perspective, the question comes down to the “how” of cloud services. How are you going to provide the benefits of “the cloud” to your customers? This often breaks down to whose cloud you are going to use. Azure? AWS? Google? Many MSPs start looking into these platforms and are often surprised by the cost of consuming services on these publicly available platforms. This often sends them to the thought process of “Well, I’ll just build my own cloud.” I’m here today to tell you why that’s a bad idea. In fact, it’s a TERRIBLE idea.

I get it. I really do. I’m a technical guy myself, and I’ll be the first person to try to engineer my way to some cost savings, but trust me on this one. It may sound like a good idea, but it’s not, and I’m going to give you a few reasons why. I’m going to compare building a cloud yourself with some examples of the solutions provided by Microsoft Azure, simply because that is the public cloud offering I’m most familiar with.

There are several cloud hosting options available. However, the reasons why building your own cloud solution is a bad idea are the same regardless of which you prefer.

Reason 1 – Building a Cloud is Complicated Business

Cloud services can be wildly complex. You don’t need me to tell you that. The problem comes when we technical guys see it as a challenge. I’m going to quote the famous Ian Malcolm from the movie Jurassic Park here: “You were so preoccupied with whether or not you could, you didn’t stop to think if you should.” The quote is very applicable here.

Sure, you could likely put together a highly redundant cluster that serves up VMs or hosted services to your customers. You could then likely assemble some VPN solutions or MPLS configurations to connect them. You could make a highly capable storage mesh and failover capabilities. The list goes on. I would bet money you’ll still miss something in your planning, because I would miss something as well. Making your own cloud is complicated, and there are many bases to cover.

Let’s then say that, sure, maybe you got it right. Maybe you did cover your bases. What happens when you have to manage the platform on an ongoing basis? Let’s focus on one common management task as an example. What about a patch cycle? You have to patch the OS of the core components, hardware components, firmware, switch infrastructure, storage hardware…etc…etc.

Who is testing those patches in an identical environment before rolling them out to production? Who is making sure all those patches work together? Are you going to eat the man-hours needed to do that properly? Will someone be standing by to install zero-day patches at the drop of a hat? If I’m a customer of your cloud services, I expect you to do all of the above. What am I going to do if that’s not the case?

These are just some of the complexities of attempting to build and maintain your own cloud. It is by no means a comprehensive list, but you get the idea.

Reason 2 – Planning for Failure at Scale Sucks

What about when things break? Do you have a plan to fail over all those workloads elsewhere in the event that’s needed? Do you have regularly scheduled DR drills? Do you provide your customers the ability to do this themselves?

Things are going to break. There is going to be downtime for single failure domains in your cloud for things like patching, hardware replacement, and unplanned issues. How do you protect those consuming your cloud from those types of operations? Does your solution to this problem fail over the entire cloud or just individual customers? Is it seamless?

If those seem like difficult questions to answer, that’s because they’re meant to be difficult questions. Your customers are depending on you to provide these services. Some of your customers may not be able to EVER tolerate an outage, so your cloud needs to address these types of situations and/or provide the customer a mechanism to do it themselves. To me, that’s a bare-bones capability of a modern-day cloud.

For example, Microsoft provides a number of capabilities designed to address the availability needs of its customers. Availability sets in Azure address patching and hardware maintenance concerns. Azure’s cloud storage has the ability to keep copies of data in multiple regions. Microsoft provides you with multiple mechanisms for dealing with failures and disasters in Azure, and in the event that they fail to do so for some reason, they have money-backed SLAs. Does your cloud do that?

Reason 3 – Does Your Datacenter Reach World-Wide? Didn’t Think So…

Speaking of availability, does your cloud footprint reach worldwide? Let’s say I’m a manufacturer that sells worldwide. Would I want my website hosted in a single location? What if I want to host a web app that allows my customers to log in and purchase from everywhere? Would I want that on a web server in my own datacenter or distributed across multiple datacenters and multiple geographies to allow for natural disasters?

Most MSPs I’m aware of don’t have that kind of footprint. Yes, there are some with multiple datacenters, but you can’t compete with the likes of Microsoft Azure and the others. As of the time of this writing, Microsoft Azure has 60+ regions worldwide and is available in 140 countries. If you’re an MSP offering cloud services through a major provider like Microsoft, this will allow you to provide an unprecedented array of geographic offerings for your customers. That’s simply something your own datacenter(s) would not allow.

*Above Image from azure.microsoft.com

Reason 4 – The Big Guys Just Have Some Things That You Don’t. Accept it

Now, I’ve mentioned the above reasons so far, and I’d like to point out that these are the more direct reasons why you shouldn’t attempt to build your own cloud. However, there are many, many more. While the big players, like Microsoft, AWS, and Google, have figured out all I’ve mentioned above, there is one final point I’d like to leave you with as you’re weighing this decision.

The brutal fact is they can do some stuff in their respective stacks that you can’t and won’t be able to in a self-made cloud. I could go into detail, but I think the list of services provided by Microsoft Azure will get the point across. Can your cloud do all of this?

Reason 5 – Security and Compliance is a Full-time Job

And then there’s the ever-daunting task of ensuring your cloud environment is secure and compliant. Security isn’t just a feature; it’s an ongoing battle against ever-evolving threats. The big cloud providers invest millions into security research, dedicated teams, and advanced AI-driven security solutions to protect against data breaches, unauthorized access, and other cyber threats. They also constantly update their platforms to adhere to the latest compliance standards across various industries worldwide. Can you afford the same level of commitment and resources?

Furthermore, consider the responsibility you’re taking on. When you build your own cloud, you’re not just managing data – you’re responsible for protecting it against all forms of cyber threats and ensuring it meets all regulatory requirements. A single slip-up can lead to significant legal and financial repercussions, not to mention irreversible damage to your reputation. For most MSPs, the risk and the continuous investment required to maintain top-tier security and compliance standards are simply too high a barrier. It’s not just about building a cloud; it’s about constantly defending it in a landscape where threats are always one step ahead.

What You Should Do Instead

I’m hoping you’ve decided not to build your own cloud. I won’t hold it against you if you attempt it, but I hope this article has saved you some pain and misery. If you’ve decided (based on the above) that building a cloud isn’t really your thing, you’re likely wondering what you should do instead. The answer really boils down to two options.

Resell a public cloud platform – This is likely the most obvious. Reselling a public cloud platform can not only be quite lucrative but can save your engineers (and customers) a lot of time and headaches! The big vendors all have reseller programs. Links below:

Host a pre-built cloud appliance in your datacenter – While this won’t address some of the geographical concerns mentioned above, it does give you something of a happy medium between building your own and selling someone else’s cloud. This option allows you to host a cloud that has already been vetted and built and retain some control of the hardware. The only solution that really fits into this category (that I’m personally aware of) is Azure Stack.

Azure Stack is basically all the power of Azure in your own datacenter. It comes in a pre-built appliance format and is partially maintained by Microsoft on your behalf. If you’re looking for more information on Azure Stack, you can find the official site here, or you can also view the website of Thomas Maurer.

Wrap-Up

I could go on and on with more reasons, but the point is that building a cloud that is on par with the big guys is unrealistic. Sure, the public cloud may seem expensive, but the big cloud providers have figured out all the stuff mentioned above and more. Not to mention, they’ve figured it out at scale. So, if the main goal for your business is simply providing IT services to your customers, then why are you trying to build a cloud? Even if you do manage to produce your own service, you’ll quickly hit a business ceiling. Focus on your core business of serving your customers well and sell someone else’s cloud instead. Agree? Disagree? Have you made the attempt and failed/succeeded?

Thanks for reading!

by Luke Orellana | Jun 28, 2018 | Managed Service Providers

Let’s face it, no one likes Windows Updates – least of all Managed Service Providers. However, there is a way to make the process less tedious: through automation.

For MSPs, managing Windows Updates for clients is always messy. No matter what patch management solution you use, Windows Updates will inevitably still cause you headaches, whether it’s a bad patch that gets rolled out to a number of clients or the never-ending burden of troubleshooting devices where Windows patches just aren’t installing properly.

Luckily, with the magic of PowerShell and the help of the PowerShell module PSWindowsUpdate, we can manage Windows updates in an automated fashion, allowing us to develop scripts that ease some of our Windows Update pains.

How to Install PSWindowsUpdate

PSWindowsUpdate was created by Michal Gajda and is available via the PowerShell Gallery, which makes installation a breeze. To install PSWindowsUpdate, all we have to do, if we are running Windows 10, is open a PowerShell prompt and type the following:

Install-Module -Name PSWindowsUpdate

If we want to save this module and put it on a network share so that other servers can import and run this module, then we will use the save-module cmdlet:

Save-Module -Name PSWindowsUpdate -Path

Using PSWindowsUpdate

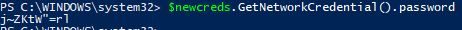

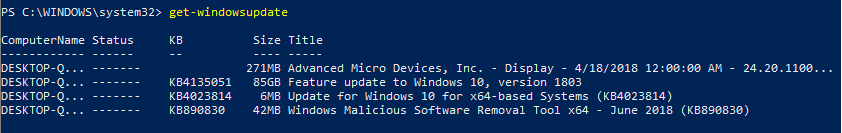

Now that we have the module installed, we can run Windows Updates via PowerShell. We can perform numerous actions with this module, but for this post, we will go over the basics. We can use the Get-WindowsUpdate cmdlet to fetch a list of available updates for our machine. In my example, I have the module installed on a workstation, and I run the following syntax to get the list of Windows updates applicable to my machine:

Get-WindowsUpdate

Now that I can see what updates my machine is missing, I can use the -Install parameter to install the updates. If I wanted to install all available updates and automatically reboot afterward, I would add the -AcceptAll and -AutoReboot parameters. The syntax looks like this:

Get-WindowsUpdate -install -acceptall -autoreboot

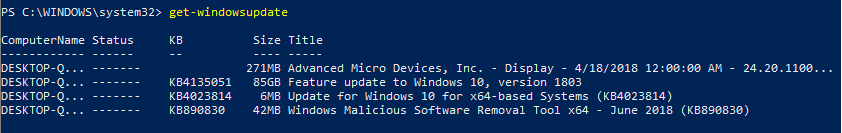

If I want to install just a specific KB, I can use the -KBArticleID parameter and specify the KB article number:

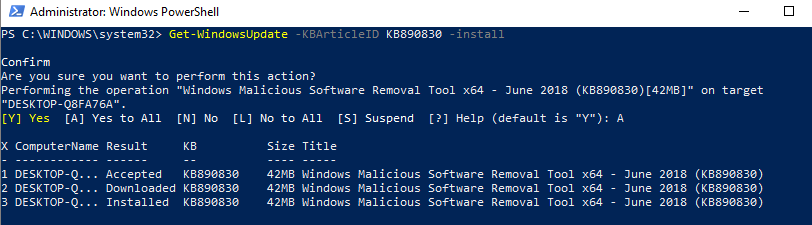

Get-WindowsUpdate -KBArticleID KB890830 -install

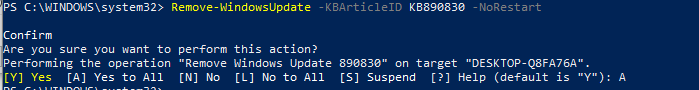

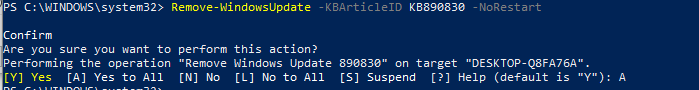

In the screenshot, you can see that we get a confirmation prompt. Then, each update step is listed as it occurs. If we wanted to remove an update from a machine, we could use the Remove-WindowsUpdate cmdlet and specify the KB with the -KBArticleID parameter. I will use the -norestart parameter so the machine does not get rebooted after the patch is uninstalled:

Remove-WindowsUpdate -KBArticleID KB890830 -NoRestart

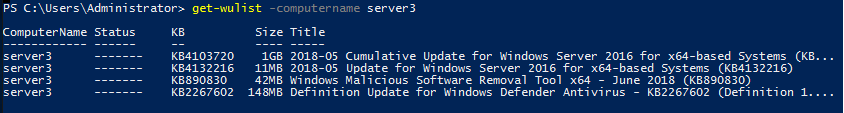

If we want to check available Windows updates remotely from other computers, we can simply use the -ComputerName parameter:
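As a rough sketch of how that could be used across several machines, the helper below calls Get-WindowsUpdate with -ComputerName for each name and reports how many updates came back. The function name Get-PendingUpdateCount and the server names in the usage note are hypothetical, and it assumes PSWindowsUpdate is installed and that your account can reach the remote machines.

```powershell
# Sketch only: requires the PSWindowsUpdate module and remote access to each computer.
function Get-PendingUpdateCount {
    param(
        [Parameter(Mandatory)]
        [string[]]$ComputerName
    )

    foreach ($c in $ComputerName) {
        # @() forces an array so .Count is reliable for zero or one result
        $updates = @(Get-WindowsUpdate -ComputerName $c)

        [pscustomobject]@{
            ComputerName   = $c
            PendingUpdates = $updates.Count
        }
    }
}
```

For example, `Get-PendingUpdateCount -ComputerName 'SRV01', 'SRV02'` would return one object per server, which is easy to sort or export for a client report.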

Continuous Update Script

PSWindowsUpdate can be used in deployment scripts to ensure Windows is completely up to date before being placed in production. Below, I have created a script that will deploy all available Windows updates to a Windows Server and restart when it is complete. Right after the restart is done, the update process can be started again and repeat itself until there are no more Windows updates left.
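The "wait, check, try again" pattern at the heart of that loop can be sketched on its own. Wait-ForCondition is a hypothetical helper name; it simply re-runs a script block until it returns a true value or the attempts run out.

```powershell
# Hypothetical helper: polls a condition with a fixed delay between attempts.
# Returns $true as soon as the condition succeeds, $false if it never does.
function Wait-ForCondition {
    param(
        [Parameter(Mandatory)][scriptblock]$Condition,
        [int]$MaxAttempts = 10,
        [int]$DelaySeconds = 10
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        if (& $Condition) { return $true }

        # No point sleeping after the final attempt
        if ($attempt -lt $MaxAttempts) { Start-Sleep -Seconds $DelaySeconds }
    }

    $false
}
```

For instance, `Wait-ForCondition -Condition { Test-Connection -ComputerName 'SRV01' -Count 1 -Quiet }` (with SRV01 as a placeholder name) would wait for a server to answer pings again after a reboot, giving up after roughly a minute and a half with the defaults.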

This is a very useful script to use for VM deployments. Unless you have the time to ensure your VM templates are always up to date every month, there will almost always be Microsoft Updates to install when deploying new Windows Virtual Machines. Also, ensuring that all available Windows Updates on a system are installed can be a very time-consuming task, as we all know Windows Updates isn’t the fastest updater in the world.

This script needs to be run from an endpoint that has the PSWindowsUpdate module installed, using an account with local administrator permissions on the remote server whose Windows updates it manages. Essentially, it installs PSWindowsUpdate on the remote server via PowerShellGet and uses the Invoke-WUJob cmdlet, which relies on Task Scheduler to control Windows updates remotely.

We have to use Task Scheduler because there are certain limitations with some of the Windows Update methods that prevent them from being called from a remote computer.
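Because the updates run inside a scheduled task, the script below tracks progress by reading a log file and counting how often the words Installed and Failed appear in it. That counting step can be sketched in isolation as follows. The function name Get-UpdateLogSummary is hypothetical, and the sample text is a stand-in for illustration only, not the real PSWindowsUpdate log layout.

```powershell
# Hypothetical helper: counts the status words the script looks for in the log text.
function Get-UpdateLogSummary {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string]$LogText
    )

    [pscustomobject]@{
        Installed = [regex]::Matches($LogText, 'Installed').Count
        Failed    = [regex]::Matches($LogText, 'Failed').Count
    }
}

# Stand-in text for illustration only; not actual module output
$sampleLog = @'
update-a Installed
update-b Installed
update-c Failed
'@

$summary = Get-UpdateLogSummary -LogText $sampleLog

# The run counts as finished once every expected update is either installed or failed
$expectedUpdates = 3
$finished = ($summary.Installed + $summary.Failed) -eq $expectedUpdates
```

Counting words is a blunt instrument: an update whose title happens to contain "Installed" or "Failed" would be counted too, which is one reason the script also keeps a timeout counter.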

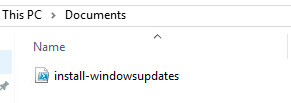

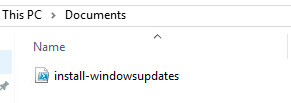

Copy this script to a notepad and save it as a .ps1. I save mine as install-windowsupdates.ps1:

<#

.SYNOPSIS

This script will automatically install all available Windows updates on a device and will automatically reboot if needed. After the reboot, Windows updates will continue to run until no more updates are available.

.PARAMETER Computer

Use the Computer parameter to specify the computer to remotely install Windows updates on.

#>

[CmdletBinding()]

param (

[parameter(Mandatory=$true,Position=1)]

[string[]]$computer

)

ForEach ($c in $computer){

#install pswindows updates module on remote machine

$nugetinstall = invoke-command -ComputerName $c -ScriptBlock {Install-PackageProvider -Name NuGet -MinimumVersion 2.8.5.201 -Force}

invoke-command -ComputerName $c -ScriptBlock {install-module pswindowsupdate -force}

invoke-command -ComputerName $c -ScriptBlock {Import-Module PSWindowsUpdate -force}

Do{

#Reset Timeouts

$connectiontimeout = 0

$updatetimeout = 0

#starts up a remote powershell session to the computer

do{

$session = New-PSSession -ComputerName $c

"reconnecting remotely to $c"

sleep -seconds 10

$connectiontimeout++

} until ($session.state -match "Opened" -or $connectiontimeout -ge 10)

#retrieves a list of available updates

"Checking for new updates available on $c"

$updates = invoke-command -session $session -scriptblock {Get-wulist -verbose}

#counts how many updates are available

$updatenumber = ($updates.kb).count

#if there are available updates proceed with installing the updates and then reboot the remote machine

if ($null -ne $updates){

#remote command to install windows updates, creates a scheduled task on remote computer

invoke-command -ComputerName $c -ScriptBlock { Invoke-WUjob -ComputerName localhost -Script "ipmo PSWindowsUpdate; Install-WindowsUpdate -AcceptAll | Out-File C:\PSWindowsUpdate.log" -Confirm:$false -RunNow}

#Show update status until the amount of installed updates equals the same as the amount of updates available

sleep -Seconds 30

do {$updatestatus = Get-Content \\$c\c$\PSWindowsUpdate.log

"Currently processing the following update:"

Get-Content \\$c\c$\PSWindowsUpdate.log | select-object -last 1

sleep -Seconds 10

$ErrorActionPreference = 'SilentlyContinue'

$installednumber = ([regex]::Matches($updatestatus, "Installed" )).count

$Failednumber = ([regex]::Matches($updatestatus, "Failed" )).count

$ErrorActionPreference = 'Continue'

$updatetimeout++

}until ( ($installednumber + $Failednumber) -eq $updatenumber -or $updatetimeout -ge 720)

#restarts the remote computer and waits till it starts up again

"restarting remote computer"

#removes schedule task from computer

invoke-command -computername $c -ScriptBlock {Unregister-ScheduledTask -TaskName PSWindowsUpdate -Confirm:$false}

# rename update log

$date = Get-Date -Format "MM-dd-yyyy_HH-mm-ss"

Rename-Item \\$c\c$\PSWindowsUpdate.log -NewName "WindowsUpdate-$date.log"

Restart-Computer -Wait -ComputerName $c -Force

}

}until($null -eq $updates)

"Windows is now up to date on $c"

}
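The completion check in the inner loop above can be pulled out into a small function to make it easier to reason about. This is only a sketch: Get-UpdateLogProgress is a hypothetical helper name (it is not part of PSWindowsUpdate), and the sample log lines are invented, since the real log format depends on the module version.

```powershell
# Hypothetical helper (not part of PSWindowsUpdate): summarizes a log the same
# way the script's inner loop does, by counting "Installed" and "Failed".
function Get-UpdateLogProgress {
    param (
        [string[]]$LogLines,   # lines read from the log, e.g. via Get-Content
        [int]$ExpectedCount    # number of updates reported as available
    )

    # Join the lines so a single regex pass covers the whole log.
    $text = $LogLines -join "`n"

    $installed = ([regex]::Matches($text, 'Installed')).Count
    $failed    = ([regex]::Matches($text, 'Failed')).Count

    [pscustomobject]@{
        Installed = $installed
        Failed    = $failed
        # -ge rather than -eq, so an extra match cannot stall the loop.
        Done      = ($installed + $failed) -ge $ExpectedCount
    }
}

# Sample lines are invented for illustration; real log output will differ.
$sample = @(
    'KB0000001 Downloaded'
    'KB0000001 Installed'
    'KB0000002 Failed'
)
Get-UpdateLogProgress -LogLines $sample -ExpectedCount 2
```

The script itself compares the counts with -eq; using -ge here is a design choice for the sketch, so that a log containing more matches than expected still ends the wait instead of running until the timeout.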

Now that you have your .ps1 file created, remember the location where you saved it:

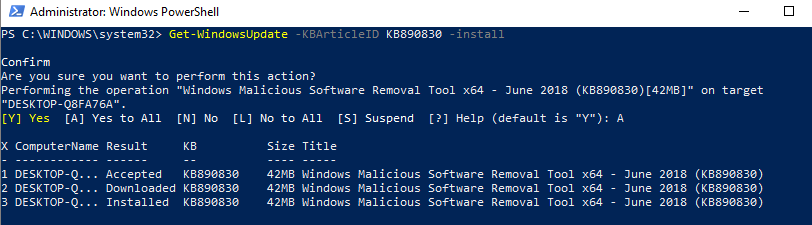

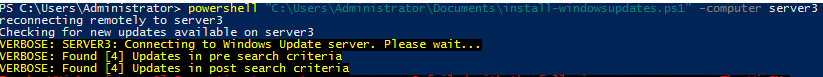

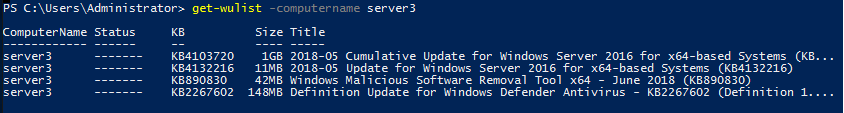

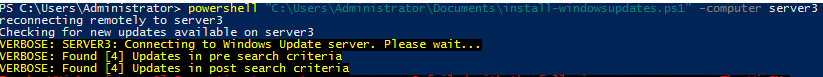

We will open up an administrative PowerShell console and type in the following command in order to deploy all available windows updates on our server “Server3”:

PowerShell "C:\users\Administrator\Documents\install-windowsupdates.ps1" -computer Server3

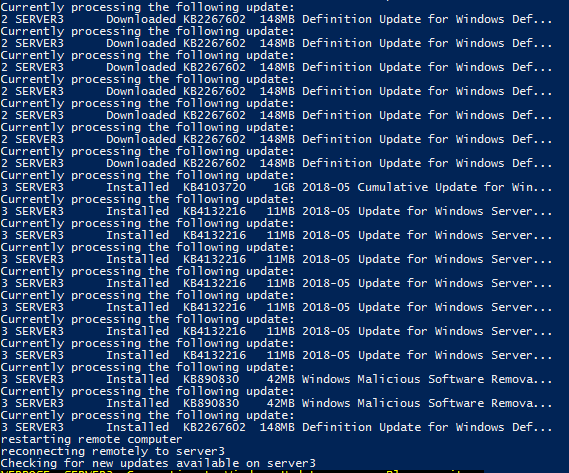

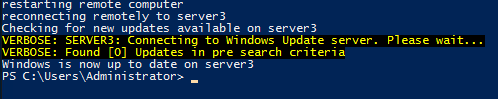

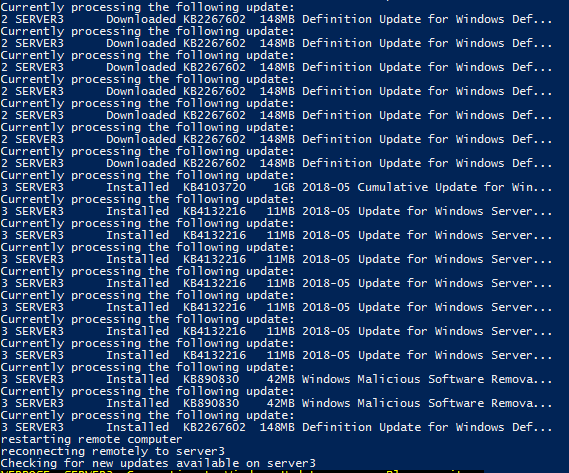

A remote connection is established with Server3, and the module is installed remotely. Then the script runs Get-WindowsUpdate to list any available Windows updates and instructs the server to install them:

You can see in the screenshot that after the updates are installed and the server is rebooted, the script picks back up again and starts the process of verifying if there are any new updates available. If there are, it will run through the steps of installing them again. Once there are no more available updates, the script will stop:

Wrap-Up

As you can see, this method is quite useful in several different situations. It’s simply another tool you can add to your toolbox to help your team assist your customers. More efficient operations are a good thing for everyone!

What about you? Do you have Windows Update stories to share? Any particularly favorite workarounds? Has this post and script been useful to you?

Also, I’ve written a lot of automation for MSPs here. If you like this, check out the following blog posts:

Automation for MSPs: Getting started with Source Control

Automation for MSPs: HTML Tables

Automation for MSPs: Advanced PowerShell Functions

Thanks for reading!

by Nick Cavalancia | Jun 22, 2018 | Managed Service Providers

How quickly do you assess a new customer’s needs? Making a fast and accurate judgment of a customer’s needs is a hugely important first step in any business relationship. The following steps will ensure you can figure out what a customer’s customized backup service should look like within your first meeting.

Successful service providers tend to keep their services tightly wound around a common service definition and rarely allow one-off services, which are unpredictable and can be less than profitable. But one service does require some degree of flexibility when it comes to specific execution: backups. Backups are the one service that revolves around what the customer already has in place and what they need delivered to work alongside that existing environment, instead of the usual “you put it in place, so that defines what gets delivered.”

Most service providers start with the tech they have in place – the backup solution, storage mediums, use of the cloud, etc. – and then build a service offering around that. But, unless you focus on the exact same type and size of customer, every customer has different needs regarding how they define what it takes to keep them operational. For some, it’s merely maintaining Internet access to connect to SaaS applications. For others, it’s manufacturing systems that need to be running. And for an entirely different group, it’s access to on-prem files and databases.

You also need to be aware that your customer’s needs may vary while your offering remains static. This creates what’s known as a recovery gap. Simply put, the recovery gap is the distance between your customer’s recovery needs and your ability to deliver. If your backup offering is static, some degree of gap most likely exists.

So, how do you define your customer’s backup needs and eliminate that recovery gap?

Below is a simple 5-step plan that starts with the business recovery needs and works backward to the backups needed.

The 5 Steps

Step 1: Start with Business Needs

Begin with how the business defines being operational. This should include key services, systems, applications, and data deemed anywhere from important to critical. To best ensure you and the customer are looking at this through the same lens, inquire how long the business can be without each key operational element, as well as ask how the loss impacts the business. This could be things like core manufacturing applications for a factory or a medical office’s patient records program. What does the business NEED to remain operational?

Step 2: Identify Recovery Objectives

Define recovery time (RTO) and recovery point objectives (RPO) for each element identified in Step 1. In this step, you put objective parameters around the somewhat subjective Step 1 definitions of what’s “critical.” Remember, the RTO defines how quickly your customer needs to recover, and the RPO defines how much data (usually a period of time) they can lose and still remain functional.

Step 3: Create Recovery Tiers

You’ll begin to see patterns around the recovery objectives that will allow you to group certain parts of the environment into recovery tiers. For example, there may be several applications, data sets, and systems that need to be recovered and operational within an hour, while the remainder of the environment can take as long as 24 hours – giving you two recovery tiers. The business owners will start by saying everything is critical, but you’ll find that after talking with them candidly, they’ll settle pretty quickly on their most critical systems.
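As a rough illustration of Steps 2 and 3, the grouping can be sketched in a few lines of PowerShell. Every system name and objective below is invented for the example; in practice the list would come from the conversations with the customer.

```powershell
# All system names and objectives below are invented for illustration.
$systems = @(
    [pscustomobject]@{ Name = 'Patient records DB'; RtoHours = 1;  RpoHours = 1 }
    [pscustomobject]@{ Name = 'Scheduling app';     RtoHours = 1;  RpoHours = 4 }
    [pscustomobject]@{ Name = 'File shares';        RtoHours = 24; RpoHours = 24 }
    [pscustomobject]@{ Name = 'Print server';       RtoHours = 24; RpoHours = 24 }
)

# Group by RTO; each distinct RTO becomes one recovery tier.
$tiers = $systems |
    Group-Object -Property RtoHours |
    Sort-Object { [int]$_.Name } |
    ForEach-Object {
        [pscustomobject]@{
            RtoHours    = [int]$_.Name
            # The tightest RPO in the tier drives backup frequency for that tier.
            MinRpoHours = ($_.Group | Measure-Object -Property RpoHours -Minimum).Minimum
            Systems     = $_.Group.Name
        }
    }

$tiers | Format-Table -AutoSize
```

With this sample data the result is two tiers, a 1-hour tier and a 24-hour tier, which mirrors the example in Step 3. Grouping purely on RTO is a simplification; a real tiering exercise may also weigh RPO, dependencies, and cost.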

Step 4: Build a Backup Strategy

Build your backup strategy around the recovery needs as defined in Step 3. In a perfect MSP world, this is merely an exercise in properly defining the backups and their frequency. But in some cases, you may learn that your recovery gap requires additional protective measures – such as the use of offsite backup storage to the cloud – to ensure a needed system can recover within the needed timeframe.

In this case, you may need to rethink your service definitions and expand them to include additional infrastructure and software in order to meet your customer’s needs. Additionally, what you’ll find working through this exercise is that finding these gaps for one customer may help you later on down the road.

Step 5: Continuously Monitor and Adjust

The final step is to establish a process for ongoing monitoring and adjustment of your backup strategy. The business environment, as well as technology, is continually evolving. What was a sufficient backup strategy a year ago might not meet the current needs of the business today. Implement a system of regular reviews with your customer to assess and update recovery objectives, tiers, and strategies.

This might involve adopting new technologies, adjusting backup frequencies, or re-evaluating critical systems as the business grows or changes direction. This proactive approach ensures your backup services remain closely aligned with your customer’s needs, reducing the likelihood of a recovery gap re-emerging and maintaining a high level of customer satisfaction.

Additional Steps

Not listed here (but worth mentioning in the interest of being thorough) is a testing step. It’s great to have a plan that you believe will work for your customer, but to be sure, you need to test whatever changes you make to close the recovery gap.

How this Works in Practice

As an example, let’s imagine a 24/7 medical clinic. They have approached you to provide backup services. To date, you’ve provided simple file-based backups to your customers. Upon talking about the clinic’s recovery needs, you find that any outage the clinic suffers must be resolved within 4 hours (4-hour RTO).

The clinic’s patient records database is quite large at 2 TB. A traditional file-based recovery would be hard-pressed to meet this need, so you’ve already identified a recovery gap. With this information, you then procure a backup application that can boot workloads directly from the backup storage, thus meeting the clinic’s 4-hour requirement.
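One way to see why a file-based restore struggles here is to work out the sustained throughput it would need. The sketch below is arithmetic only and makes simplifying assumptions: decimal units (1 TB = 10^12 bytes), and the entire 4-hour window spent copying data, with no time for detection, setup, or verification.

```powershell
# Worked arithmetic only; decimal units (1 TB = 10^12 bytes) are an assumption.
$databaseBytes = 2e12        # 2 TB patient records database
$rtoSeconds    = 4 * 3600    # 4-hour RTO

# Sustained rate needed if the entire window were spent copying data.
$bytesPerSecond     = $databaseBytes / $rtoSeconds
$megabytesPerSecond = [math]::Round($bytesPerSecond / 1e6, 1)
$megabitsPerSecond  = [math]::Round($bytesPerSecond * 8 / 1e6, 0)

"Required sustained throughput: $megabytesPerSecond MB/s ($megabitsPerSecond Mbit/s)"
```

That works out to roughly 139 MB/s, or a little over 1.1 Gbit/s, which is more than a fully saturated 1 Gbit/s link can carry even before any overhead is counted.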

While this is a very simplistic example and only talks about a single recovery tier, you get the idea of how this process can help you find gaps in your offering at the beginning of the working relationship so you can start off in the right manner. As we have shown in other blog posts here on the MSP Dojo e.g. 4 Common BaaS Pitfalls MSPs Definitely Must Avoid or How to Build a Successful Managed Services Team, getting the foundation right from the start is so important for your future endeavors.

By following these tips, you’ll be in a much better position to focus on delivering exactly the service your customers require and delight them in its delivery.

Wrap-Up

Just because you’ve always done backups the same way for as long as you can remember doesn’t mean it meets your customers’ needs. The 5 steps above will better define your backups around what the customer requires.

More than likely, it won’t impact your service definitions enough that you feel like every customer is a one-off service. But it will impact your ability to make the customer very happy when you recover exactly what they need in the timeframe they need it, should a disaster strike.

What are your thoughts? Do you find it fairly easy to track down recovery gaps in your offerings?

by Nick Cavalancia | Jun 14, 2018 | Managed Service Providers

The one constant in IT is that it’s constantly evolving. New technologies and their associated certifications are constantly rolled out, so how can you keep up? You can’t possibly pursue all IT certifications, so which ones should you choose? This checklist will simplify your certification process and ensure your engineers are at the top of their game!

As a service provider, you must stay current with technology, security, and service delivery to meet the demands of your customers. One of the key ways to accomplish this is to get your engineering team certified. There are literally hundreds of certifications available to your team. So, which certifications will make you the most competitive, current, and able to meet customer needs? There are two ways to answer this:

- The certifications you need your team to have are based on the services you currently deliver.

- The certifications the industry demands indicate what services you should be offering.

As someone who wants your business to be successful, I want to ensure you’re moving with the times. So, I’m going to focus on the latter of the two in order to position your business to be ready to meet the needs of its clients.

To be clear, I’m not advocating that you abandon all the services you’ve built and move to new ones just because a certification says so; I’m simply pointing out that the industry is moving, and you need to be right there with it. As you’ll see, many of these certifications already fit into your services wheelhouse, with a few pushing you forward.

The 8 Most Important IT Certification Topics for MSP Engineers

According to CIO Magazine, the most valuable certifications today (again, which align with services demand) fall into eight distinct service areas:

1) Security

A top priority for any customer organization of any size is security. Most MSPs are looking for ways to offer security-related services, including transitioning to an MSSP. A few specific security-focused certifications are in demand: three from ISACA and one from ISC2, both well-respected IT-centric organizations that seek to improve the quality of IT service delivery. ISACA’s certifications include Certified in Risk and Information Systems Control (CRISC), Certified Information Security Manager (CISM), and Certified Information Systems Auditor (CISA). ISC2 offers the well-known Certified Information Systems Security Professional (CISSP).

2) Cloud

Those customers looking to shift their operations to the cloud are looking for partners who not only understand how to administer cloud-based environments but also have staff that has expertise in designing custom cloud solutions.

IT certifications such as Amazon’s AWS Certified Solutions Architect – Associate are in high demand due to the success of AWS. For service providers focused on Azure, Microsoft also offers several technical and solutions-focused certifications.

3) Virtualization

You already likely have your customers running much of their infrastructure and critical systems virtually. Having a certified team member who fully understands the architecting and management of virtual environments will help both on-prem and as you move customers to the cloud.

Both Citrix and VMware certifications are in demand. Citrix’s Citrix Certified Professional – Virtualization (CCP-V) and Citrix Certified Associate – Virtualization (CCA-V), along with VMware’s VMware Certified Professional 6 – Data Center Virtualization (VCP6-DCV) round out the list.

4) Networking

The foundation of the environments you manage is the network itself. Networks exist both physically and virtually today, so it’s necessary to have staff who fully understand both.

While nearly any networking-related certifications are certainly helpful to get the job done, those in the highest demand include the Cisco Certified Network Professional (CCNP) Routing and Switching and Citrix Certified Associate – Networking (CCA-N).

5) Project Management

It’s not all about keeping technology running; many of your one-off projects require a significant amount of planning, tracking, and management.

That’s why one of the most in-demand areas of certification is project management. The Project Management Institute’s Project Management Professional (PMP) and CompTIA’s Project+ certifications provide the skills needed to ensure projects are properly managed.

6) Service Management

Most of your engineering team is heads-down, putting out fires. They’re thinking solely about the here and now. But it’s important to have at least one member of the team who is thinking about the service lifecycle, helping to mature your offerings.

The ITIL v3 Foundation certification focuses on the ITIL service lifecycle, the ITIL phase interactions, and outcomes, as well as IT service management processes and best practices.

7) Data Privacy and Compliance

Understanding and implementing data privacy and compliance is more critical than ever. The Certified Information Privacy Professional (CIPP) is a globally recognized certification that equips professionals with the knowledge of privacy laws and regulations. It varies by region to reflect different legal frameworks, making it essential for those ensuring organizational compliance with global privacy laws.

Alongside, the Certified Information Privacy Manager (CIPM) is the world’s first and only certification in privacy program management. It focuses on equipping professionals with the necessary skills to establish, maintain, and manage a privacy program throughout all stages of its lifecycle, from inception to implementation and beyond.

8) Cybersecurity Analysis

As the landscape of cyber threats evolves, so does the need for skilled cybersecurity analysts. The Certified Ethical Hacker (CEH) certification provides IT professionals with the knowledge and tools to look for system weaknesses and vulnerabilities. It’s about understanding and using the same techniques as a malicious hacker, but legally and ethically. This certification delves into a wide range of hacking techniques and their legal implications, making it a comprehensive choice for security professionals.

Complementing this is the CompTIA Cybersecurity Analyst (CySA+), which emphasizes behavioral analytics to detect and combat cybersecurity threats. It focuses on proactive defense using monitoring and data analysis, training individuals to identify and combat malware and advanced persistent threats (APTs), resulting in enhanced threat visibility across a broad attack surface.

Summary

The first four service areas are likely very familiar to you already, with the last four being, perhaps, a bit foreign. Regardless, these IT certification areas (as well as some of the specific IT certifications mentioned) represent the knowledge needed to service MSP customers’ needs properly.

Take a look at the links above and begin a plan with your engineering team to get them certified. By doing so, you’ll have a stronger team that can take on bigger challenges, larger clients, and longer projects – all making your organization better positioned to grow. Training your employees is also a great way to foster trust and improve retention (however, make sure you still plan what to do if you lose a key engineer!)

What about your team? Are you following a certification path similar to this one? Are there other certifications you are pursuing outside of these for something more specific? What are they, and what is the reasoning?
