Creating Web Scraping Tools for MSPs with PowerShell

Building a web scraping tool can be incredibly useful for MSPs. There isn’t always an API or PowerShell cmdlet available for interfacing with a web page. However, there are other tricks we can use with PowerShell to automate the collection and processing of a web page’s contents.

This can be a huge time saver: automating the collection of and reporting on data from a web page can save employees or clients hundreds of hours. Today, I’m going to show you how to build your own web scraping tool using PowerShell. Let’s get started!

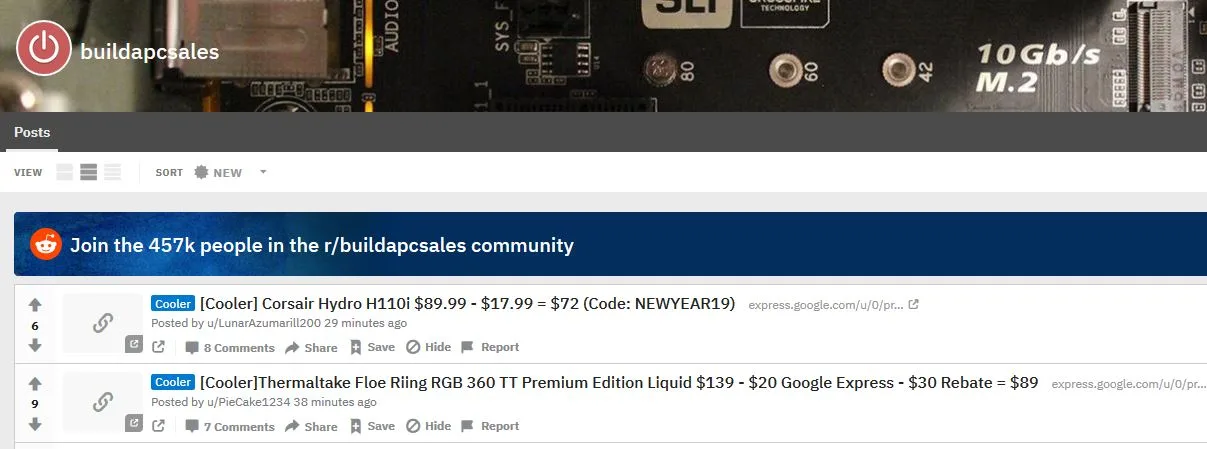

We are going to scrape the BuildAPCSales subreddit. This is an extremely useful web page, as many users contribute by posting the latest deals on PC parts. As an avid gamer, I would find it extremely useful to check it routinely and report back on any deals for the PC parts I’m looking for.

Also, because stock is limited for some of these sales, it would be extremely beneficial to know about these deals as soon as they are posted. I know there is a Reddit API available that we could use, but to demonstrate making a web scraping tool, we are not going to use it.

Web Scraping with Invoke-WebRequest

First, we need to take a look at how the website is structured. Web scraping is an art, since websites are structured differently; we will need to look at the way the HTML is structured and use PowerShell to parse through it to gather the info we are looking for. Let’s take a look at the structure of BuildAPCSales. We can see that each sale is displayed with a big header that contains all the info we want to know, namely the item and the price:

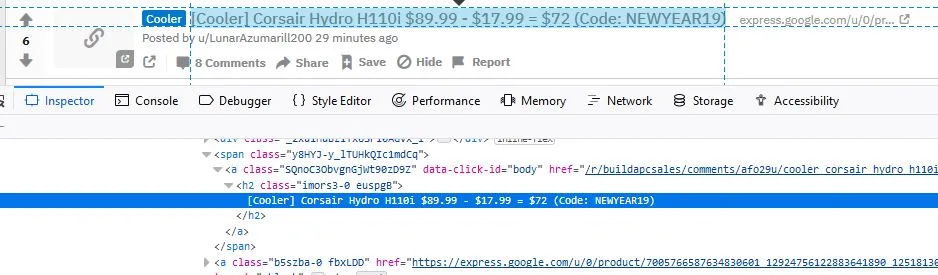

Now, let’s use the Web Developer Tools in our browser to inspect the HTML of these posts further. I am using Firefox in this example. I can see that each post title is tagged in HTML with the “h2” tag.

Let’s try scraping all of the “h2” tags and see what we come up with. We will use the Invoke-WebRequest PowerShell cmdlet with the URL of the Reddit web page and save the result in a variable so we can parse its HTML:

$data = invoke-webrequest -uri "https://www.reddit.com/r/buildapcsales/new/"

Now we are going to take our new variable and parse the HTML data for any elements tagged as “h2”. Then we will run through each object and display its “innertext” property, which is the text content of the tag:

$data.ParsedHtml.all.tags("h2") | ForEach-Object -MemberName innertext
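Note that ParsedHtml depends on Internet Explorer’s HTML engine, so it is only populated in Windows PowerShell 5.1; in PowerShell 6 and later, Invoke-WebRequest returns the raw HTML in .Content but no ParsedHtml. As a rough fallback, here is a minimal sketch that pulls the text out of each “h2” tag with a regular expression. The function name Get-H2Text and the sample HTML are hypothetical, and regex parsing only holds up for simple, predictable markup, so treat it as a stopgap rather than a real HTML parser.

```powershell
# Hypothetical helper: return the inner text of every <h2> element in an HTML string.
# Regex-based, so it only handles simple markup (no nested <h2> tags).
function Get-H2Text {
    param([Parameter(Mandatory)][string]$Html)

    # (?is): case-insensitive, and '.' also matches newlines
    $pattern = '(?is)<h2\b[^>]*>(.*?)</h2>'
    foreach ($m in [regex]::Matches($Html, $pattern)) {
        # Strip any inner tags (e.g. <span>), then decode entities such as &amp;
        $text = [regex]::Replace($m.Groups[1].Value, '<[^>]+>', '')
        [System.Net.WebUtility]::HtmlDecode($text).Trim()
    }
}

# Made-up sample markup standing in for a downloaded page
$sample = '<div><h2 class="title">[Monitor] Acer 24" 144Hz - $159</h2><h2>[CPU] <span>Ryzen 5</span> &amp; cooler</h2></div>'
Get-H2Text -Html $sample
```

Against a live page you would pass the .Content property of the Invoke-WebRequest result as -Html instead of the sample string.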

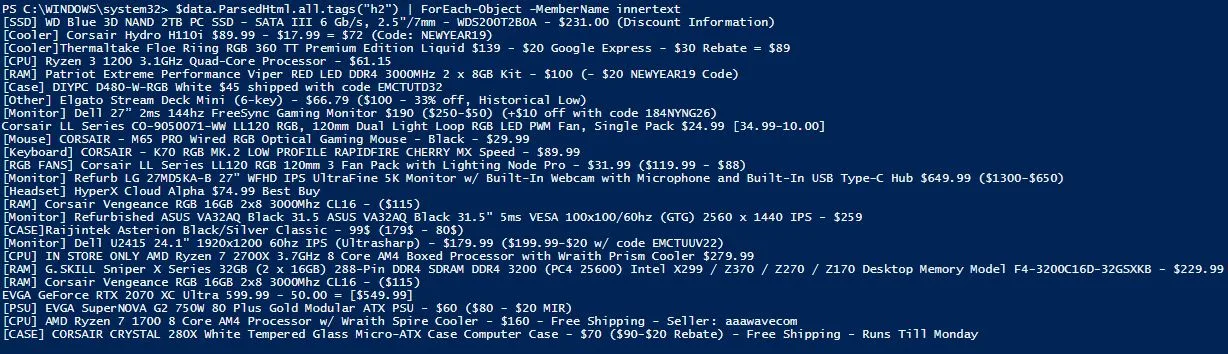

Yay, it worked! We are able to collect all the deals posted:

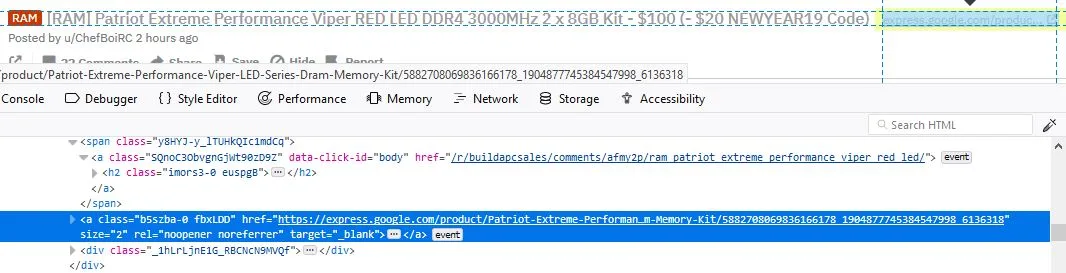

I like what we have so far, but I want not only the post headings but also the links for each sale. Let’s go back and look at the web page formatting and see what else we can scrape from it to get the links. When using the inspection tool in Firefox (Ctrl + Shift + C) and clicking on one of the sale links, I can see the HTML snippet for that post:

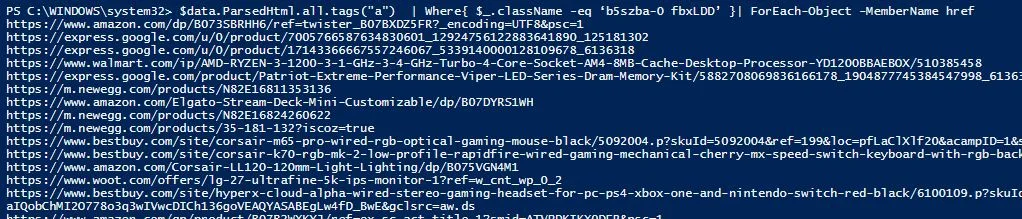

It looks like these are tagged as “a,” which defines a hyperlink in HTML. So we want to search for all HTML objects tagged as “a” and output the “href” for these instead of the “innertext” as we did in the example above. But this would give us every hyperlink on the page, so we need to narrow down our search to pull only the links for sales. Inspecting the web page further, I can see that each sale hyperlink has the class name “b5szba-0 fbxLDD”. So, we’ll use this to narrow our search. Class names like this look auto-generated, so they may change when Reddit updates its site; if the script stops finding links, re-inspect the page:

$data.ParsedHtml.all.tags("a") | Where-Object { $_.className -eq 'b5szba-0 fbxLDD' } | ForEach-Object -MemberName href
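If ParsedHtml isn’t available (it is only populated in Windows PowerShell 5.1), the link filter can also be approximated with regular expressions. This is a minimal sketch with a hypothetical function, Get-LinkByClass, and made-up sample HTML: it finds each opening “a” tag, reads its class and href attributes separately so attribute order doesn’t matter, and returns the href only when the class matches exactly, mirroring the Where-Object filter above.

```powershell
# Hypothetical helper: return the href of every <a> whose class attribute equals $ClassName.
function Get-LinkByClass {
    param(
        [Parameter(Mandatory)][string]$Html,
        [Parameter(Mandatory)][string]$ClassName
    )

    # Capture each opening <a ...> tag, then inspect its attributes individually
    foreach ($tag in [regex]::Matches($Html, '(?i)<a\b[^>]*>')) {
        $class = [regex]::Match($tag.Value, '(?i)\bclass="([^"]*)"')
        $href  = [regex]::Match($tag.Value, '(?i)\bhref="([^"]*)"')
        if ($class.Success -and $href.Success -and $class.Groups[1].Value -eq $ClassName) {
            # Decode entities such as &amp; inside query strings
            [System.Net.WebUtility]::HtmlDecode($href.Groups[1].Value)
        }
    }
}

# Made-up sample markup: one navigation link and two sale links
$sample = @'
<a class="nav" href="/r/buildapcsales/">Home</a>
<a href="https://example.com/deal?id=1&amp;ref=r" class="b5szba-0 fbxLDD">Deal 1</a>
<a class="b5szba-0 fbxLDD" href="https://example.com/deal2">Deal 2</a>
'@
Get-LinkByClass -Html $sample -ClassName 'b5szba-0 fbxLDD'
```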

Now we have the links to the items for each post. We now have all the information we are looking for:

Processing Our Web Information

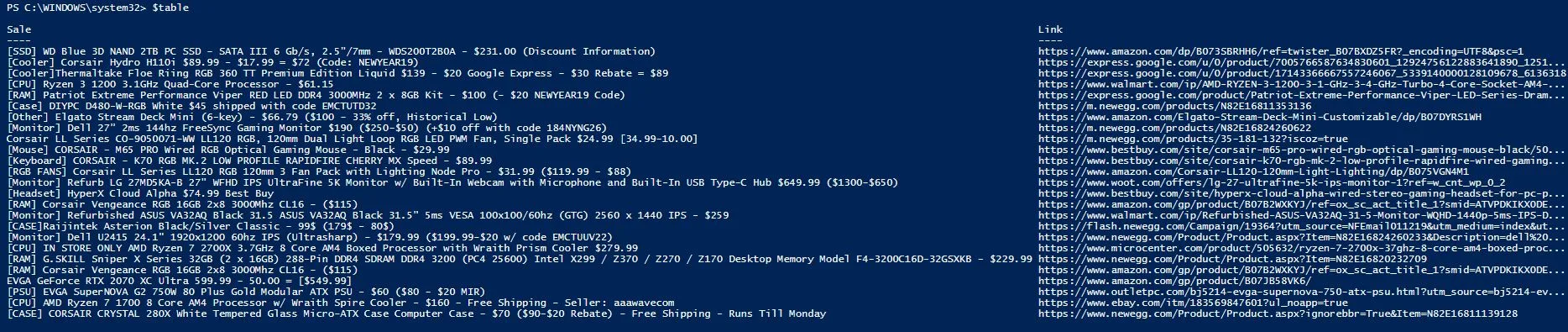

Now that we have the information we want, we need to process it. I would like to create a table that pairs each sale with its link. We can do this with the following code:

$data = Invoke-WebRequest -Uri "https://www.reddit.com/r/buildapcsales/new/"
$Sales = @($data.ParsedHtml.all.tags("h2") | ForEach-Object -MemberName innertext)
$Links = @($data.ParsedHtml.all.tags("a") | Where-Object { $_.className -eq 'b5szba-0 fbxLDD' } | ForEach-Object -MemberName href)

# Pair each sale heading with the link at the same position.
# Indexing by position (instead of $Sales.IndexOf($Sale)) keeps duplicate headings matched to their own links.
$table = for ($index = 0; $index -lt $Sales.Count; $index++) {
    [pscustomobject]@{
        Sale = $Sales[$index]
        Link = $Links[$index]
    }
}
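The table pairs headings and links by their index, so it quietly assumes the page yields exactly one matching link per “h2”. Here is a minimal sketch of the same pairing wrapped in a hypothetical New-SaleTable function that also warns when the two lists differ in length (for example, if a heading that isn’t a sale slips in). The sample data is made up.

```powershell
# Hypothetical helper: pair each sale heading with the link at the same position.
function New-SaleTable {
    param(
        [string[]]$Sales,
        [string[]]$Links
    )

    # A length mismatch usually means the page layout changed and pairs are misaligned
    if ($Sales.Count -ne $Links.Count) {
        Write-Warning "Found $($Sales.Count) headings but $($Links.Count) links; pairs may be misaligned."
    }

    # Only pair up as many rows as both lists can fill
    $count = [Math]::Min($Sales.Count, $Links.Count)
    for ($i = 0; $i -lt $count; $i++) {
        [pscustomobject]@{ Sale = $Sales[$i]; Link = $Links[$i] }
    }
}

# Made-up data with a duplicated heading
$saleNames = '[GPU] RTX deal', '[GPU] RTX deal', '[Monitor] 144Hz'
$saleLinks = 'https://example.com/1', 'https://example.com/2', 'https://example.com/3'
$table = @(New-SaleTable -Sales $saleNames -Links $saleLinks)
$table | Format-Table -AutoSize
```

Truncating to the shorter list is a design choice; stopping the script on a mismatch would be equally reasonable for an unattended task.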

When we go to look at our $table, we can see the correct info:

Taking It Further

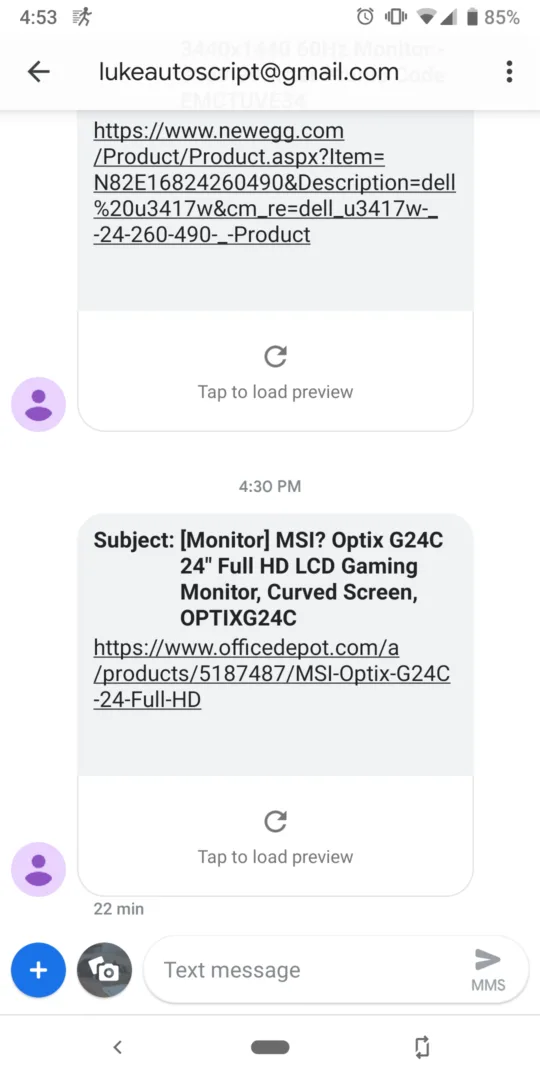

Now, let’s take it a step further and make this web scraping script useful. I want to be notified by text if there is a sale on a specific PC component that I’m looking for. Currently, I’m searching for a good 144Hz monitor. So, to get notified of the best deals, I created a script that runs as a scheduled task on my computer every 15 minutes.

It scrapes the Reddit web page for any monitor deals and notifies me of each deal via text. Then, it records the deals that have been sent to me in a text file to ensure that I’m not spammed repeatedly with the same deal. Also, since I don’t have an SMTP server at my house, I’ve set up a Gmail account to send email messages via PowerShell. Since I want to receive these alerts as texts rather than emails, I send them to my phone number’s email-to-SMS address, which most major carriers provide.
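The “don’t send the same deal twice” step can be sketched as a small reusable function. This assumes a hypothetical name, Select-NewLink, and uses a demo file in the temp folder instead of C:\scripts\SaleDb.txt. It returns only links not yet recorded, appends them to the file, and treats a missing file (the very first run) as an empty history.

```powershell
# Hypothetical helper: return only links not already recorded in $Path, then record them.
function Select-NewLink {
    param(
        [string[]]$Link,
        [Parameter(Mandatory)][string]$Path
    )

    # On the first run the file does not exist yet, so start with an empty history
    $seen = if (Test-Path -LiteralPath $Path) { @(Get-Content -LiteralPath $Path) } else { @() }

    # Skip empty entries, already-seen links, and duplicates within this batch
    $new = @($Link | Where-Object { $_ -and $seen -notcontains $_ } | Select-Object -Unique)
    if ($new.Count -gt 0) {
        Add-Content -LiteralPath $Path -Value $new
    }
    $new
}

# Demo: start from a clean file, then run twice with overlapping links
$db = Join-Path ([System.IO.Path]::GetTempPath()) 'SaleDb-demo.txt'
Remove-Item -LiteralPath $db -ErrorAction SilentlyContinue
$first  = @(Select-NewLink -Link 'https://example.com/1', 'https://example.com/2' -Path $db)
$second = @(Select-NewLink -Link 'https://example.com/2', 'https://example.com/3' -Path $db)
"First run:  $($first -join ', ')"
"Second run: $($second -join ', ')"
```

Like the original script, this records a link before any alert is sent, so a failed send will not be retried on the next run.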

I’m using Google Fi, so I simply put in my phone number followed by @msg.fi.google.com, and the email goes right to my phone as a text. I’ve also encrypted my Gmail account password into a file using the process outlined in our blog post about encrypted passwords in PowerShell. After everything’s done, the script looks like this:

# Edit this to change the string to web scrape for.
# -match treats it as a case-insensitive regular expression; "Monitor]" matches the "[Monitor]" tag.
$PCPart = "Monitor]"

# Edit this to change the email address to send alerts to
$EmailAddress = "1234567890@msg.fi.google.com"

# Text file that records the links already sent
$SaleDb = "C:\scripts\SaleDb.txt"

# Collect information from web page
$data = Invoke-WebRequest -Uri "https://www.reddit.com/r/buildapcsales/new/"

# Filter out headers and links
$Sales = @($data.ParsedHtml.all.tags("h2") | ForEach-Object -MemberName innertext)
$Links = @($data.ParsedHtml.all.tags("a") | Where-Object { $_.className -eq 'b5szba-0 fbxLDD' } | ForEach-Object -MemberName href)

# Create table pairing each header with the link at the same position
$table = for ($index = 0; $index -lt $Sales.Count; $index++) {
    [pscustomobject]@{
        Sale = $Sales[$index]
        Link = $Links[$index]
    }
}

# Analyze table for any deals that include the PC part string we are looking for
$SaletoCheck = $table | Where-Object { $_.Sale -match $PCPart }

foreach ($sale in $SaletoCheck) {
    # Links sent on earlier runs; the file does not exist yet on the very first run
    $sent = if (Test-Path $SaleDb) { Get-Content $SaleDb } else { @() }

    if ($sent -notcontains $sale.Link) {
        # Save link to text file so we don't send the same deal twice
        $sale.Link | Out-File $SaleDb -Append

        # Obtain password for Gmail account from encrypted text file
        $password = Get-Content "C:\Scripts\aespw.txt" | ConvertTo-SecureString
        $credential = New-Object System.Management.Automation.PSCredential("lukeautoscript@gmail.com", $password)

        $props = @{
            From       = "lukeautoscript@gmail.com"
            To         = $EmailAddress
            Subject    = $sale.Sale
            Body       = $sale.Link
            SmtpServer = "smtp.gmail.com"
            Port       = 587
            Credential = $credential
        }
        Send-MailMessage @props -UseSsl
    }
}
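Because -match treats $PCPart as a case-insensitive regular expression, "Monitor]" works: an unpaired ] is a literal character in .NET regex. Other characters such as [, (, + and . are special, though; "[Monitor]" left unescaped would be a character class matching any single one of its letters. Here is a small sketch with made-up post titles comparing the patterns, using [regex]::Escape to match a tag literally:

```powershell
# Made-up post titles for illustration
$titles = @(
    '[Monitor] Acer XFA240 24" 144Hz - $159'
    '[GPU] Some graphics card - $299'
    '[Keyboard] Mechanical keyboard with monitor stand'
)

# The script's pattern: "Monitor]" requires the closing bracket, so the keyboard post is skipped
$original = @($titles | Where-Object { $_ -match 'Monitor]' })

# Unescaped "[Monitor]" is a character class (any ONE of M, o, n, i, t, r), so every title matches
$loose = @($titles | Where-Object { $_ -match '[Monitor]' })

# [regex]::Escape makes the brackets literal, matching the exact "[Monitor]" tag
$pattern = [regex]::Escape('[Monitor]')
$strict = @($titles | Where-Object { $_ -match $pattern })

"Original: $($original.Count)  Loose: $($loose.Count)  Strict: $($strict.Count)"
```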

We wait for a sale for a good monitor to pop up and see our end result:

Wrap-Up

As you can see, web scraping tools can be incredibly powerful for parsing useful web pages. They open up possibilities for scripts that one might think were not possible. As I said previously, it is an art; much of the difficulty depends on how the website is formatted and what information you are looking for.

Feel free to use my script from the demo if you want to configure your own notifications for PC part deals. If you’re curious, I ended up getting a good deal on an Acer XFA240, and the picture looks amazing at 144Hz!